I, Chatbot

Editor’s Note: This is an excerpt of a full report. To read the entire analysis with endnotes, click here to download the report as a PDF.

Executive Summary

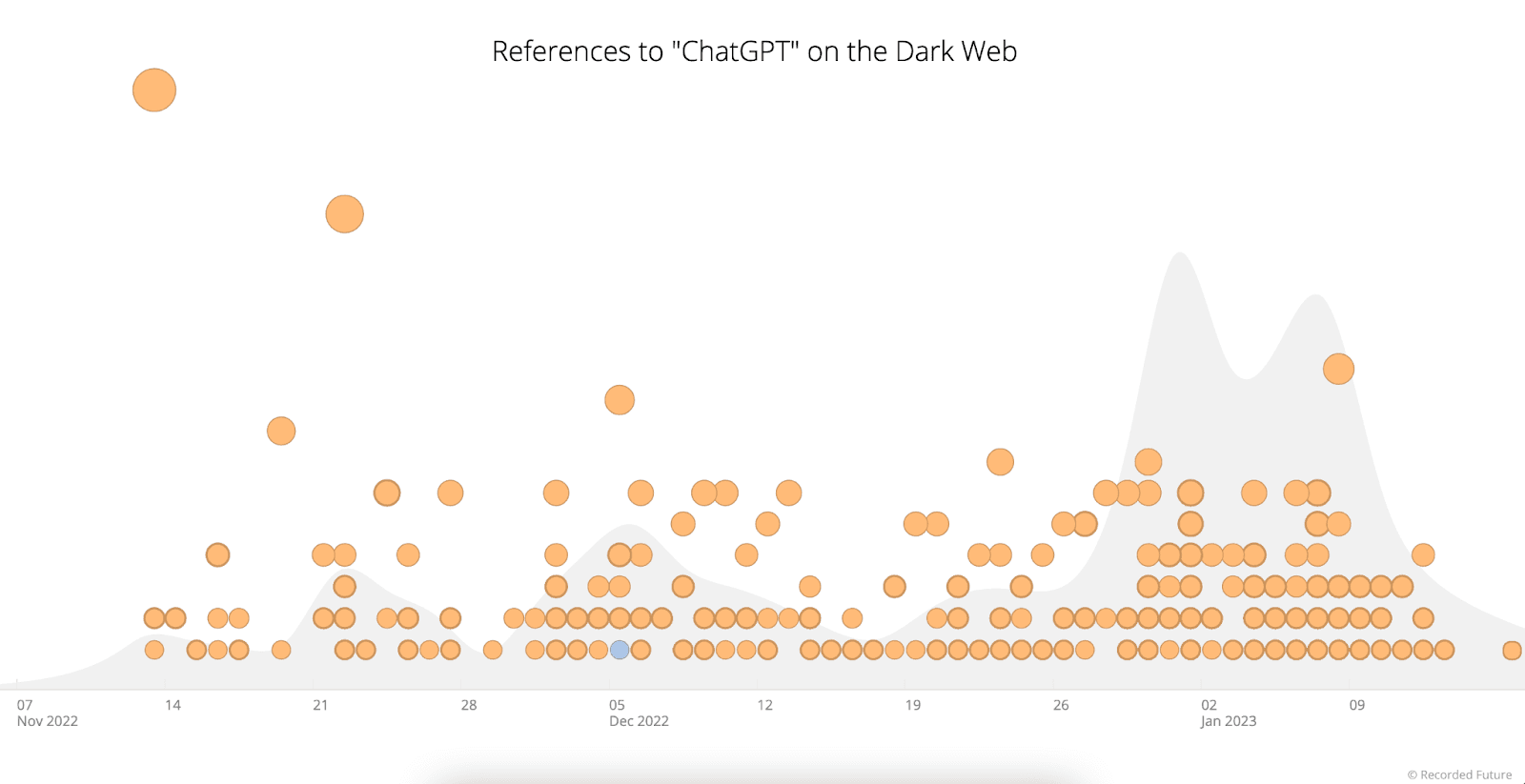

ChatGPT is a chatbot developed by OpenAI, an artificial intelligence (AI) laboratory based in the US. It uses the GPT-3 family of autoregressive (AR) language models. ChatGPT launched on November 30, 2022, and has attracted widespread attention. Alongside the potential advantages of ChatGPT, we have identified several use cases ripe for abuse. While ChatGPT has the potential to be abused by nation-state actors to enable cyberespionage, information operations (IOs), and disruptive cyberattacks (“cyberwarfare”), we believe these use cases are less immediate threats than those posed by cybercriminals. In the short term, we believe that non-state actors with limited technical knowledge and resources pose a more serious threat via the malicious use of ChatGPT.

The types of threat actors that we believe currently pose the greatest threat can be classified as script kiddies, hacktivists, scammers and spammers, payment card fraudsters, and threat actors engaging in other lowly and disreputable forms of cybercrime. Within days of the ChatGPT launch, we identified many threat actors on dark web and special-access forums sharing buggy but functional malware, social engineering tutorials, money-making schemes, and more, all enabled by the use of ChatGPT. While none of these activities have risen to the seriousness of ransomware, data extortion, denial of service, cyberterrorism, and so on, these attack vectors remain possibilities.

This report uses ChatGPT itself, sometimes in its own words, to demonstrate its functionality, rationalize its use and abuse, and replicate threats that currently exist in the cybercriminal underground.

Key Findings

- ChatGPT lowers the barrier to entry for threat actors with limited programming abilities or technical skills. It can produce effective results for users with only an elementary understanding of the fundamentals of cybersecurity and computer science.

- We identified threat actors on dark web and special-access sources sharing proof-of-concept ChatGPT conversations that enable malware development, social engineering, disinformation, phishing, malvertising, and money-making schemes.

- We believe that non-state threat actors pose the most immediate threat to individuals, organizations, and governments via the malicious use of ChatGPT.

- With limited time and experience on the ChatGPT platform, we were able to replicate malicious code identified on dark web and special-access forums.

Background

(The following description was written by ChatGPT and transcribed verbatim in response to the prompt to provide “a detailed response addressing its history and functionality”.)

ChatGPT, or the Generative Pre-training Transformer, is a powerful conversational language model developed by OpenAI. It is based on the GPT architecture and was first released in 2019. Since then, it has undergone several updates, including the release of version 3 in 2020.

The model was trained on a large corpus of text data and is capable of understanding and responding to a wide range of topics and prompts. This makes it highly versatile and well-suited for a variety of natural language processing (NLP) tasks, including question answering, language translation, text summarization, and even creative writing. One of the key strengths of ChatGPT is its ability to generate highly coherent and contextually appropriate text, which is why it is often used in applications such as chatbots, virtual assistants, and automated writing.

The model's large capacity allows it to perform well on NLP tasks with minimal fine-tuning. This makes it an attractive option for developers and researchers looking to build custom NLP models without the need for extensive training data. However, it's important to note that ChatGPT is a unidirectional model, meaning it can only generate text based on the input it has been given. This means that it is not able to answer questions that rely on understanding the context of the conversation or the world.

One of the most common use cases for ChatGPT is in chatbot development. The model's ability to generate human-like text makes it well-suited for creating chatbot conversations that are natural and engaging for users. This can be especially useful for customer service chatbots, which can handle a wide range of customer queries and complaints without the need for human intervention.

Another popular use case for ChatGPT is in automated writing. The model can be fine-tuned to generate articles, stories, and other forms of written content with a high degree of coherence and fluency. This can be useful for content generation in a variety of industries, including journalism, marketing, and publishing.

Additionally, ChatGPT can be used for language translation, which can be highly beneficial for companies and organizations that operate in multiple languages. The model can be fine-tuned to translate text from one language to another with a high degree of accuracy, which can save companies significant time and resources.

In conclusion, ChatGPT is a powerful conversational language model that can be used for a wide range of NLP tasks. Its ability to generate highly coherent and contextually appropriate text makes it well-suited for applications such as chatbots, virtual assistants, and automated writing. Additionally, its large capacity allows it to perform well on NLP tasks with minimal fine-tuning, making it an attractive option for developers and researchers looking to build custom NLP models.

ChatGPT was launched publicly by OpenAI on November 30, 2022. In the following weeks, it garnered widespread media attention for its functionality and its seemingly boundless potential for future use. Researchers, academics, and journalists speculated on use cases. The proposed benefits of ChatGPT include the automation of engineering tasks, applications for data science and analytics, software development, educational technology (“edtech”), and much more.

Figure 1: References to ChatGPT on dark web and special-access forums (Source: Recorded Future)

Amid the excitement, optimism, and transhumanist thought-experimenting, some cynicism has been expressed about the use of ChatGPT for deceptive, unethical, or malicious purposes. Some have argued that ChatGPT should be banned in schools due to its potential for enabling plagiarism and systemic cheating on homework, writing assignments, and take-home exams. Others believe that its open registration policy makes it easily accessible for threat actors interested in developing malware, gaining unauthorized access to networks, or coordinating convincing phishing campaigns. These debates raise questions about the authenticity and accuracy of ChatGPT (which is expected to improve over time) and its effects on the job market, remote work and education, freelancing, and more.

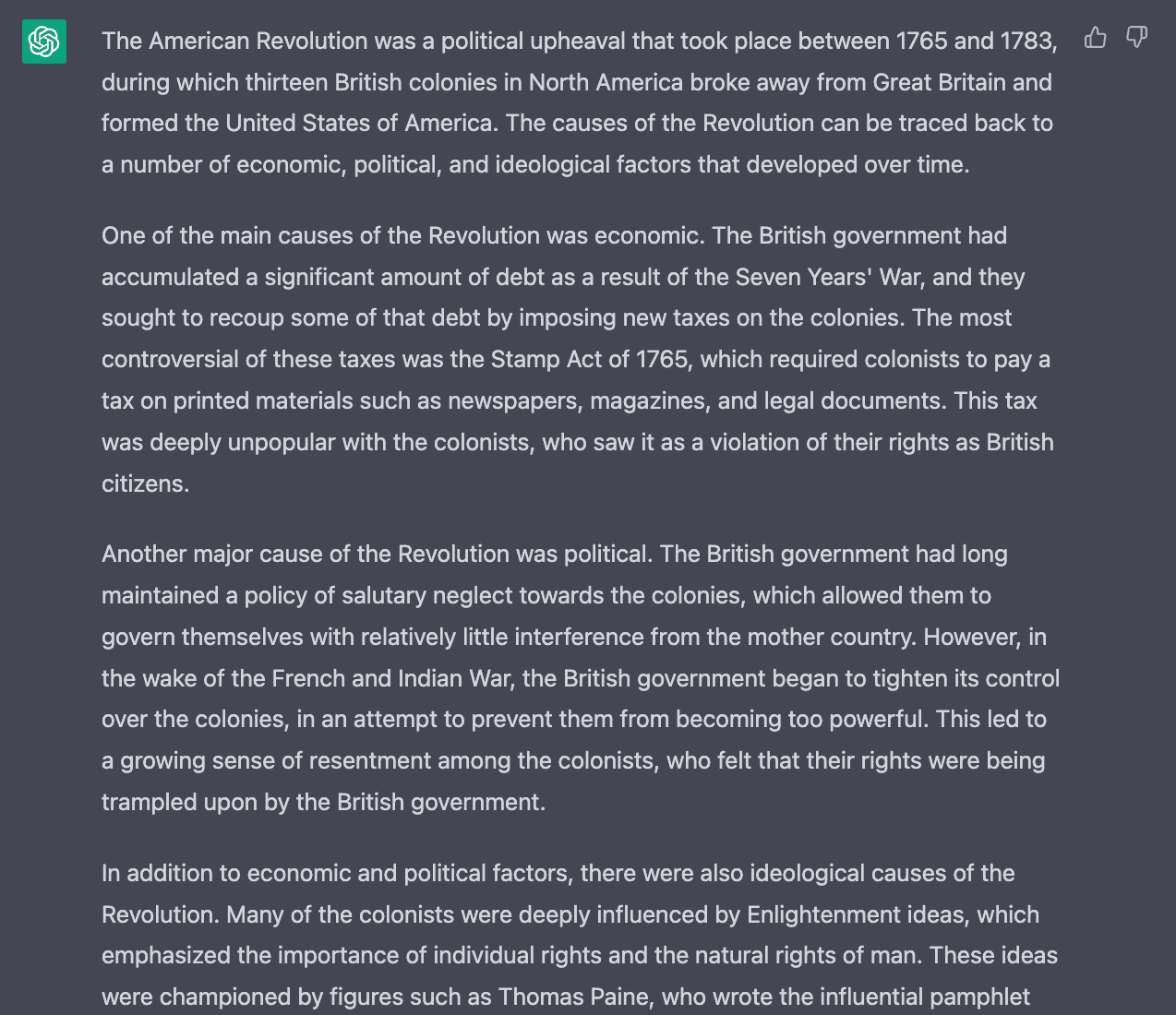

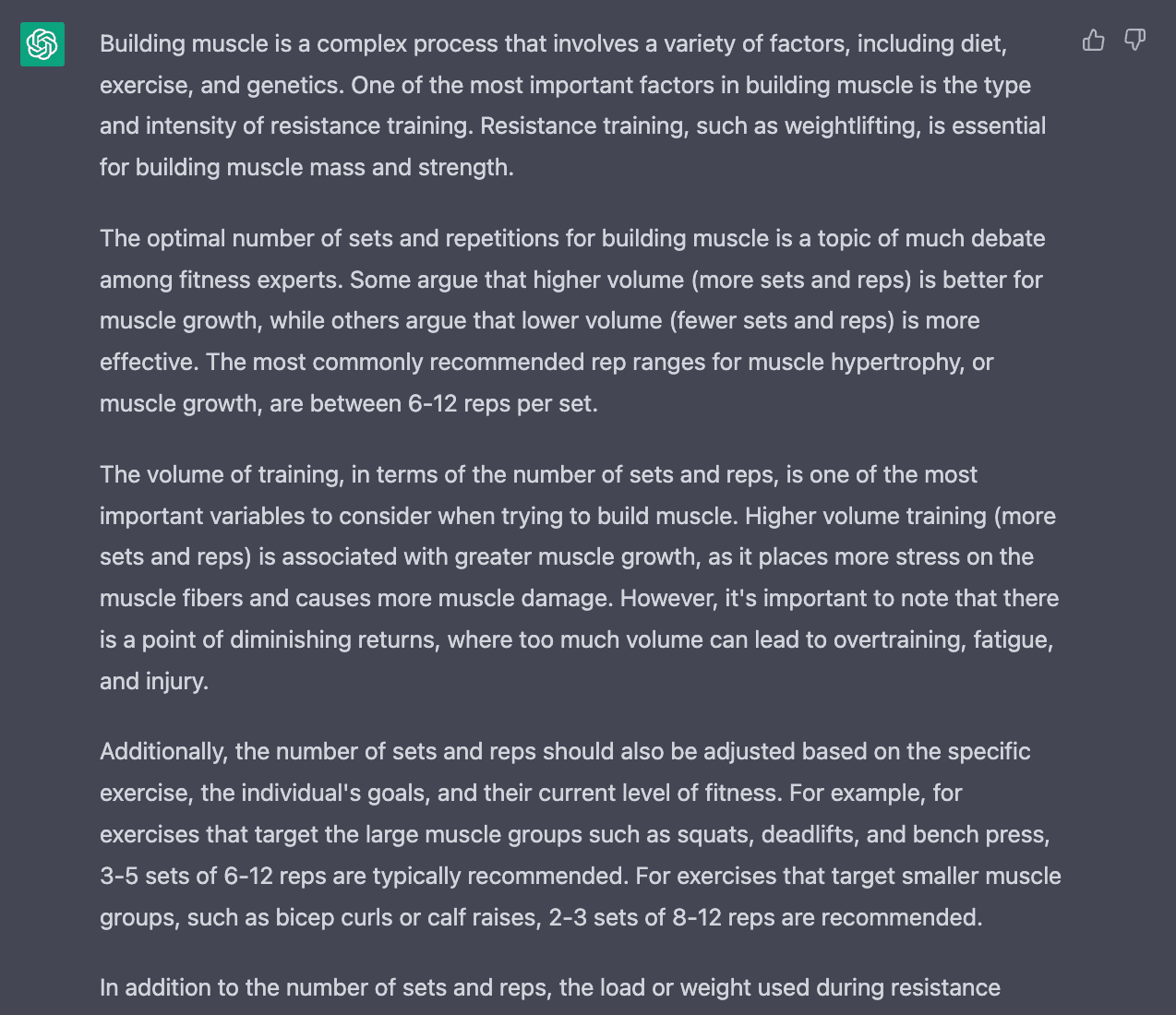

Will ChatGPT cause a widespread market disruption and displace individuals in affected roles? As an experiment, we examined some of the top-rated listings from the most active content creators on freelancing platforms such as Fiverr. We used ChatGPT to replicate these tasks, generating 500- to 1,000-word research papers in seconds and completing miscellaneous technical tasks.

Figure 2: ChatGPT performing “Writing & Translation” tasks commonly advertised on the Fiverr freelancing platform. The tasks were chosen at random and based on top-rated Fiverr results. Tasks include writing 500-word essays on the causes of the American Revolution (Left) and the optimal number of sets and repetitions for muscular hypertrophy (Right). (Source: Recorded Future, ChatGPT)

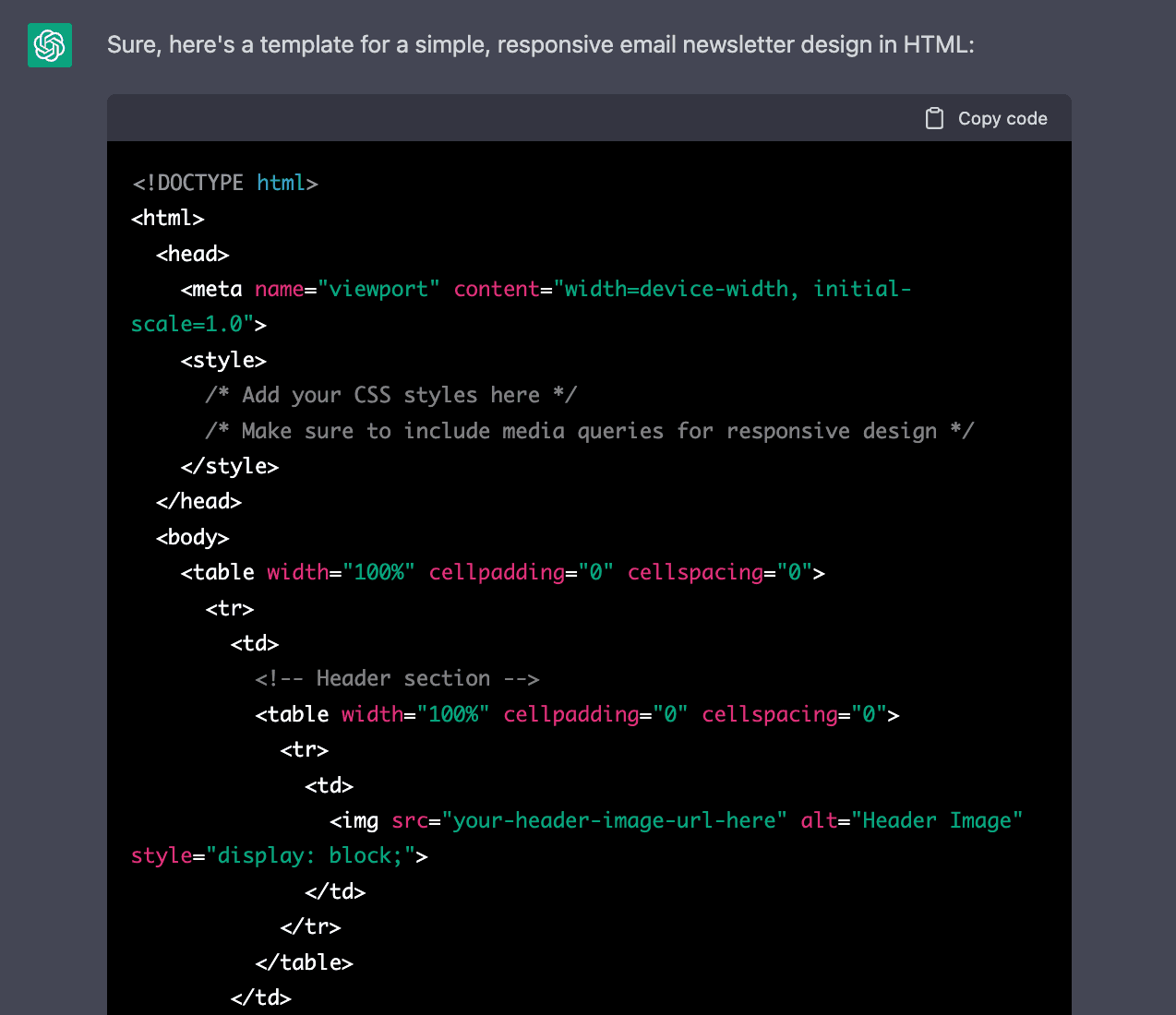

Figure 3: ChatGPT performing “Programming & Tech” tasks commonly advertised on the Fiverr freelancing platform. Tasks were chosen at random and based on top-rated Fiverr results. They include creating an interactive advertisement banner in JavaScript for a WordPress website (Left) and a template for a responsive email newsletter in HTML (Right). (Source: Recorded Future, ChatGPT)

Cybercriminals have quickly caught on to this functionality of ChatGPT, monetizing fraudulent freelance work that uses ChatGPT to automate contracted tasks en masse. Threat actors also claim to have written e-books with ChatGPT, which they have allegedly listed for sale under false pen names on popular marketplaces. In some cases, these same threat actors have publicly claimed to make upwards of $4,000 per day abusing ChatGPT to deceive clients and customers. We believe that these earnings claims are possible, but likely an exaggeration intended to direct traffic to the author’s profile.

Cybercriminals have also used ChatGPT to write scripts that automate commands such as dice rolling and strategic betting for online casinos and sports betting platforms, cheat on online videogames, create fraudulent affiliate marketing redirects to earn passive income, and more. Many of these tutorials are openly accessible on forums, messaging platforms, and social media frequented by cybercriminals and require no prior registration to view.

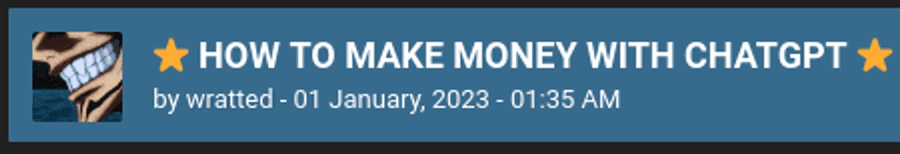

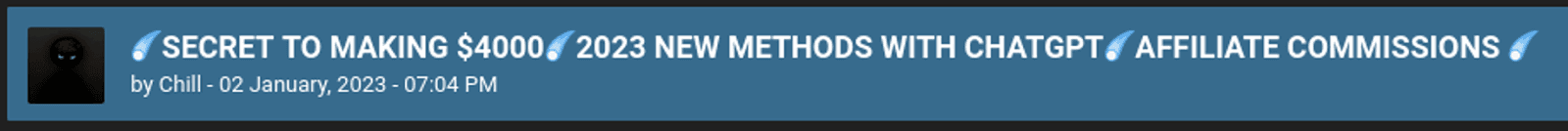

Below is a sample of such advertisements from the low-tier, English-language Cracked Forum, between December 16, 2022, and January 2, 2023:

Figure 4: Cybercriminal advertisements and tutorials on the low-tier Cracked Forum for money-making schemes using ChatGPT. Most schemes involve completing fraudulent tasks on freelancing platforms, auto-rolling on online casinos (Middle, Left), and directing traffic to affiliate marketing links (Bottom). (Source: Cracked Forum)

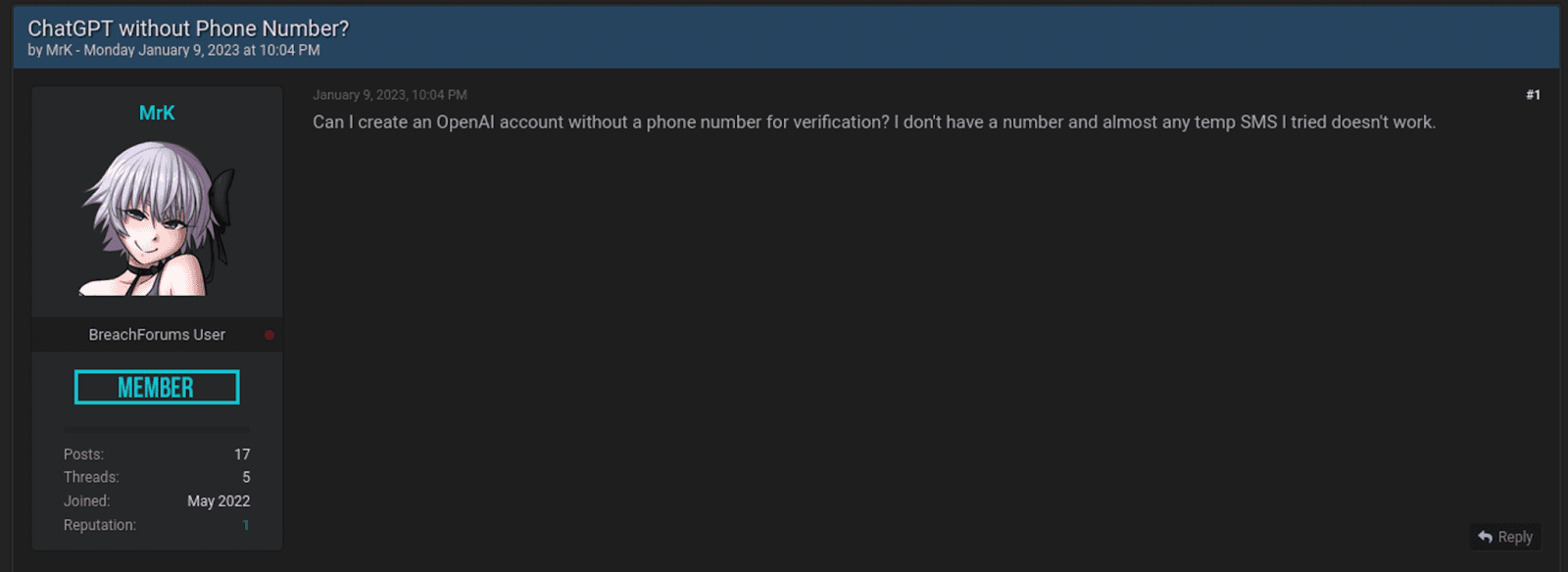

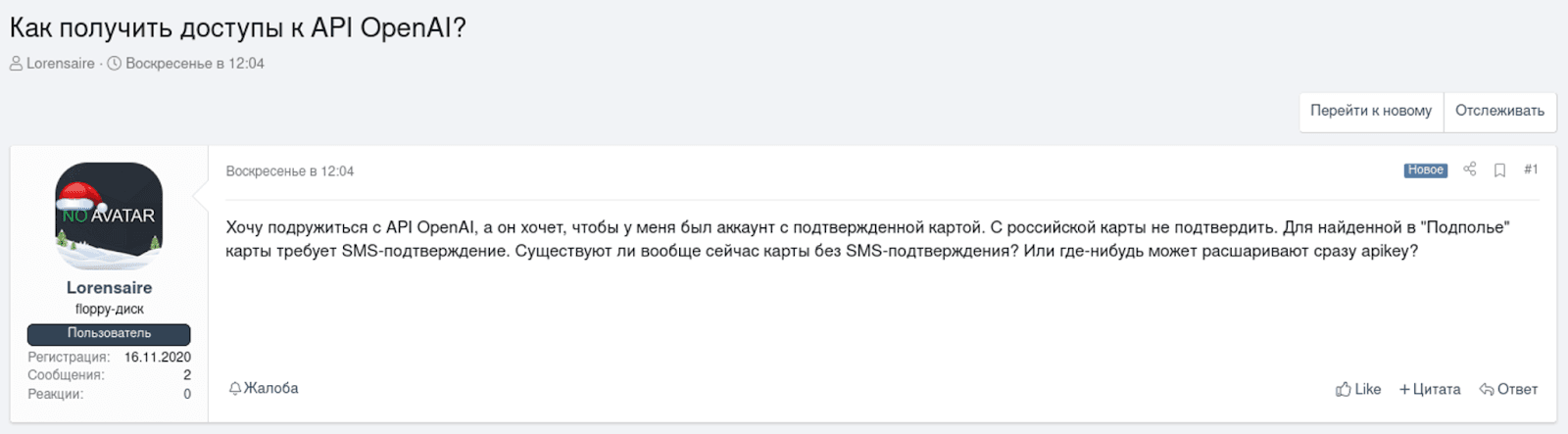

With the rise of ChatGPT in popularity on dark web and special-access forums also comes an influx of threat actors asking to register untraceable, unattributable, or fraudulent accounts with OpenAI that violate the ChatGPT community standards. In some cases, especially for threat actors physically located in the Commonwealth of Independent States (CIS), tutorials for registering accounts with temporary (“burner”) Russian phone numbers have also appeared.

Figure 5: “MrK” inquires about registering an account without a phone number (Source: Recorded Future, BreachForums)

Figure 6: “Lorensaire”, a member of the top-tier Russian-language cybercriminal forum XSS, inquires about acquiring an OpenAI application programming interface (API) key without the use of a verified phone number. The threat actor indicated that their Russian phone number cannot be used for SMS verification. (Source: Recorded Future, XSS).

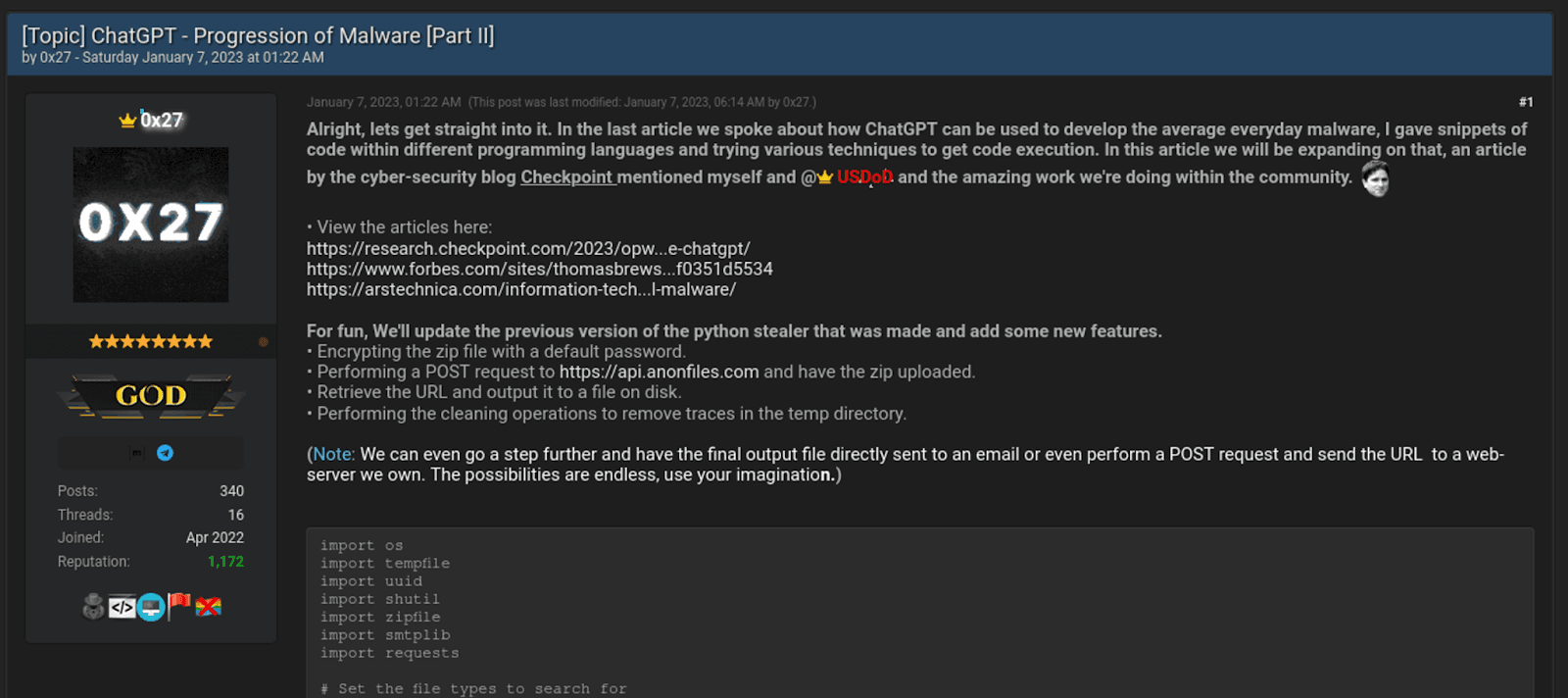

Some threat actors, such as “0x27” and “USDoD” (aka “NetSec”, “Scarfac33”) on the mid-tier BreachForums, have used recent articles on the malicious use of ChatGPT from cybersecurity researchers at Check Point, Forbes, and Ars Technica to inflate their personal brand and boost their credibility rating on the forum. These articles, while showing the potential threat of ChatGPT abuse, have also brought attention to the threat actors that are engaging in such activities, possibly legitimizing them to an extent and solidifying their reputation. While many threat actors are opportunistic and financially motivated, some are ego-driven and inspired by media attention.

Figure 7: The threat actor “0x27” acknowledges reporting on the malicious use of ChatGPT, which includes references to previous threads authored by 0x27 and “USDoD” on BreachForums. (Source: Recorded Future, BreachForums)
