Beyond Hybrid War: How China Exploits Social Media to Sway American Opinion


Recorded Future analyzed data from several Western social media platforms from October 1, 2018 through February 22, 2019 to determine how the Chinese state exploits social media to influence the American public. This report details those techniques and campaigns using data acquired from the Recorded Future® Platform and social media sites, along with other OSINT techniques. This report will be of most value to government departments, geopolitical scholars and researchers, and all users of social media.

Executive Summary

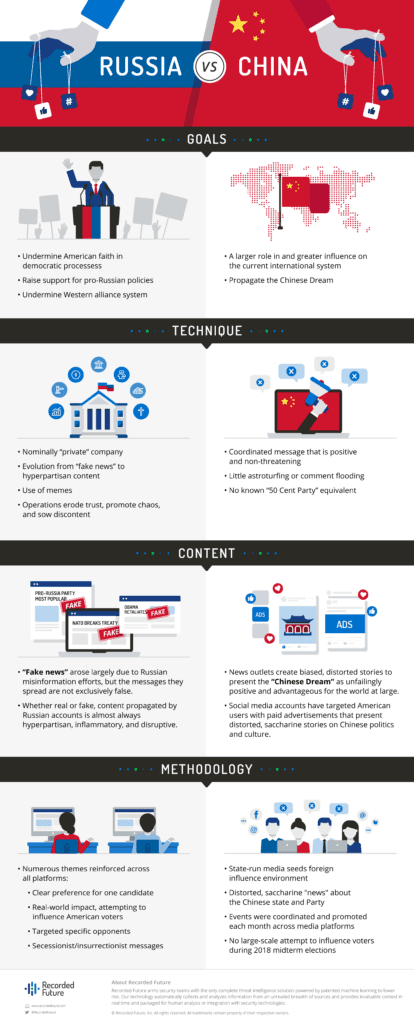

Since the 2016 U.S. presidential election, researchers, reporters, and academics have devoted countless resources to understanding the role that Russian disinformation, or influence operations, played in the outcome of the election. As a result, there exists an implicit assumption that other state-run influence campaigns must look the same and operate in the same manner.

However, our research demonstrates that social media influence campaigns are not a one-size-fits-all technique. We studied Chinese state-run social media influence operations and concluded that the Chinese state used techniques different from those of the Russian state. These differences in technique are driven by dissimilar foreign policy and strategic goals. President Xi Jinping has global strategic goals for China different from those President Vladimir Putin has for Russia; as a result, the social media influence techniques used by China are different from those used by Russia.

Further, our research has revealed that the manner in which China has attempted to influence the American population differs from the techniques it uses domestically. We believe that the Chinese state has employed a plethora of state-run media to exploit the openness of American democratic society in an effort to insert an intentionally distorted and biased narrative portraying a utopian view of the Chinese government and Party.

Key Judgments

- Chinese English-language social media influence operations are seeded by state-run media, which overwhelmingly present a positive, benign, and cooperative image of China.

- Chinese influence accounts used paid advertisements to target American users with political or nationally important messages and distorted general news about China.

- Weekly guidance issued by state propaganda authorities likely drives these accounts to propagate coordinated positive messages about special events once or twice a month.

- We assess that these Chinese state-run influence accounts did not attempt a large-scale campaign to influence American voters in the run-up to the November 6, 2018, midterm elections. However, on a small scale, we observed all of the state-run influence accounts we researched disseminating breaking news and biased content about President Trump and China-related issues.

- We believe that Russian social media influence operations are disruptive and destabilizing because those techniques support Russia’s primary strategic goal. Conversely, China’s state-run social media operations are largely positive and coordinated because those techniques support Chinese strategic goals.

Background

In January 2017, the U.S. Intelligence Community published a seminal unclassified assessment on Russian efforts to influence the 2016 U.S. presidential election. One of the key judgments in this assessment concluded:

We assess Russian President Vladimir Putin ordered an influence campaign in 2016 aimed at the US presidential election. Russia’s goals were to undermine public faith in the U.S. democratic process, denigrate Secretary Clinton, and harm her electability and potential presidency. We further assess Putin and the Russian Government developed a clear preference for President-elect Trump.

For most Americans, the influence campaign waged by the Russian state on Western social media platforms in 2016 was the first time they had ever encountered an information operation. Over the ensuing three years, investigations by the Department of Justice, academics, researchers, and others exposed the breadth, depth, and impact of the Russian influence campaign upon the American electorate.

While the experience of being targeted by an influence campaign was new for most Americans, these types of operations have been a critical component of many nations’ military and intelligence capabilities for years. Broadly, information or influence operations are defined by the RAND Corporation as “the collection of tactical information about an adversary as well as the dissemination of propaganda in pursuit of a competitive advantage over an opponent.”

For Russia, influence operations are part of a larger effort called “information confrontation.” According to the Defense Intelligence Agency:

“Information confrontation,” or IPb (informatsionnoye protivoborstvo), is the Russian government’s term for conflict in the information sphere. IPb includes diplomatic, economic, military, political, cultural, social, and religious information arenas, and encompasses two measures for influence: informational-technical effect and informational-psychological effect. Informational-technical effect is roughly analogous to computer network operations, including computer-network defense, attack, and exploitation. Informational-psychological effect refers to attempts to change people’s behavior or beliefs in favor of Russian governmental objectives. IPb is designed to shape perceptions and manipulate the behavior of target audiences. Information countermeasures are activities taken in advance of an event that could be either offensive (such as activities to discredit the key communicator) or defensive (such as measures to secure internet websites) designed to prevent an attack.

In this study of Chinese influence operations, we also incorporate a term from a seminal 2018 joint research report by two French government agencies: “information manipulation.” As defined by those agencies, information manipulation is “the intentional and massive dissemination of false or biased news for hostile political purposes.”

According to the French researchers, nation-state information manipulation meets three criteria:

- A coordinated campaign

- The diffusion of false information or information that is consciously distorted

- The political intention to cause harm

As the authors of the French report discuss, it is important, especially in the case of Chinese state-run information manipulation campaigns, to recognize that the political intent and national strategies underlying these campaigns set them apart from simply another perspective on the news.

We will use both the terms “influence operation” and “information manipulation” in this report and want to be sure the definitions and criteria for each are well articulated for the reader.

Both the Russian and Chinese state-run media assert themselves as simply countering the mainstream English-language media’s narrative and bias against their nations and people. English-language, state-run media for both countries hire fluent, often Western-educated journalists and hosts, and have become effective at leveraging legitimate native English-language journalists and television hosts.

Although both countries use English-language state media to seed the messages of their information manipulation campaigns, the Russian and Chinese approaches differ in their tactics, strategic goals, and efficacy. In this report, we focus on the English-language social media aspects of China’s information manipulation and demonstrate how and why China’s campaigns differ from Russia’s.

Research Scope

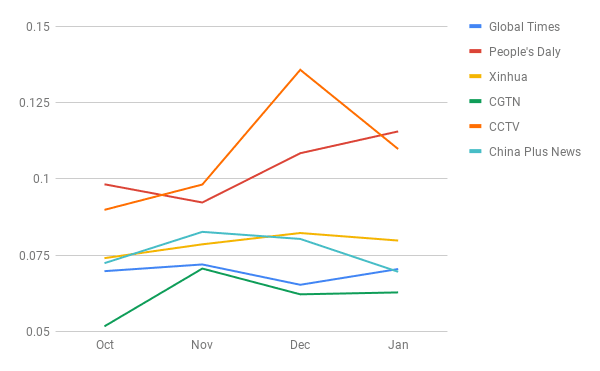

Our research focused on more than 40,000 English-language social media posts made from October 1, 2018 through January 21, 2019 by six major state-run propaganda organizations: Xinhua, the People’s Daily, China Global Television Network (CGTN), China Central Television (CCTV), China Plus News, and the Global Times. We selected these six organizations because they:

- Are highly digitized

- Possess accounts on multiple English-language social media platforms

- Are associated with Chinese intelligence agencies and/or English-language propaganda systems

Because our intent was to map out Chinese state-run influence campaigns targeting the American public, we evaluated only English-language posts and comments, as posts in Chinese were unlikely to affect most Americans. Further, our research focused on answering two fundamental questions about Chinese influence operations:

- Does China employ the same influence tactics in the English-language social media space as it does domestically?

- How do Chinese state-run influence operations compare with Russian ones? In what ways are they similar and different, and why?

We searched for patterns of automated dissemination, scrutinized relationships among reposts and top reposting accounts, examined account naming conventions, and profiled post topics and hashtags.
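The repost analysis described above can be illustrated with a minimal Python sketch. It assumes repost records have already been collected into a simple `Repost` structure (a hypothetical schema, not any platform's API), then ranks the top reposters of a given outlet, profiles hashtags, and applies two illustrative automation heuristics: a handle that looks auto-generated (letters followed by a long run of digits, matched by the hypothetical `AUTO_HANDLE` pattern) and near-constant intervals between reposts. The regular expression, the 60-second `max_spread` threshold, the default of 20 top accounts, and the sample accounts are assumptions chosen for illustration, not the criteria Recorded Future used.

```python
import re
from collections import Counter
from dataclasses import dataclass
from statistics import pstdev


@dataclass
class Repost:
    """One repost of a state-media post (hypothetical schema for illustration)."""
    account: str          # handle of the reposting account
    source: str           # state-run outlet whose post was reposted
    timestamp: float      # seconds since the epoch
    hashtags: tuple[str, ...] = ()


# Illustrative naming-convention heuristic (an assumption): letters followed by
# six or more digits, a pattern common among auto-generated handles.
AUTO_HANDLE = re.compile(r"^[A-Za-z]+\d{6,}$")


def top_reposters(reposts, source, n=20):
    """Rank accounts by how many times they reposted the given outlet."""
    counts = Counter(r.account for r in reposts if r.source == source)
    return counts.most_common(n)


def top_hashtags(reposts, n=10):
    """Profile the most frequent hashtags across all reposts, ignoring case."""
    counts = Counter(tag.lower() for r in reposts for tag in r.hashtags)
    return counts.most_common(n)


def interval_spread(reposts, account):
    """Population standard deviation of the gaps between an account's reposts.

    A spread near zero suggests scheduled posting. Returns None when the
    account has fewer than three reposts (fewer than two gaps to compare).
    """
    times = sorted(r.timestamp for r in reposts if r.account == account)
    if len(times) < 3:
        return None
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    return pstdev(gaps)


def flag_account(reposts, account, max_spread=60.0):
    """Flag an account whose handle looks auto-generated or whose reposts
    arrive at near-constant intervals (both thresholds are assumptions)."""
    spread = interval_spread(reposts, account)
    scheduled = spread is not None and spread <= max_spread
    return bool(AUTO_HANDLE.match(account)) or scheduled


# Hypothetical sample data: one account reposting Xinhua every 10 minutes.
sample = [
    Repost("newsfan48213977", "Xinhua", 0, ("China",)),
    Repost("newsfan48213977", "Xinhua", 600, ("china", "BRI")),
    Repost("newsfan48213977", "Xinhua", 1200),
    Repost("reader_jane", "Xinhua", 50, ("BRI",)),
    Repost("reader_jane", "CGTN", 4000),
]

print(top_reposters(sample, "Xinhua"))           # [('newsfan48213977', 3), ('reader_jane', 1)]
print(top_hashtags(sample, n=2))                 # [('china', 2), ('bri', 2)]
print(flag_account(sample, "newsfan48213977"))   # True
print(flag_account(sample, "reader_jane"))       # False
```

Neither heuristic establishes automation on its own; in a real analysis, signals like these would be one input alongside manual review of each account's behavior.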

We also extensively profiled Russia’s state-run influence operations and compared them with China’s. Thus far, Russia’s influence operations have been the most widely studied, and comparisons are necessary both for context and to demonstrate that not all social media influence campaigns are alike. Russia uses a specific set of tools and techniques designed to support Russia’s strategic goals. As we will demonstrate in this report, China’s social media influence campaigns employ different tactics and techniques because China’s strategic goals are different from Russia’s.

Editor’s Note: Due to terms of service restrictions, we are unable to provide details on the full range of social media activities used by the Chinese state in this report. We have listed specific instances where possible.

The Russian Model of Social Media Influence Operations

Russian attempts to leverage English-language social media to undermine faith in democratic processes, support pro-Russian policies or preferred outcomes, and sow division within Western societies have been well documented over the past several years.

Research on Russian information manipulation and social media operations has been critical to identifying the threat and countering the negative impacts on democratic societies. However, the intense focus on Russian campaigns has led many to assume that because Russia was (arguably) so effective at manipulating social media, other nations must use the same tactics. This is not the case. Russia’s social media operations are unique to Russia’s strategic goals, domestic political and power structures, and the social media landscape of 2015 to 2016.

In terms of its relationship with Western democracies, Russia has a single primary goal under which others fall. This goal is to create a “polycentric” international system where the interests and policy goals of the Russian state are supported and respected. This essentially involves the wholesale reorienting of the current, Western-dominated international system. Regarding Russia’s efforts toward the United States in particular, this means “challenging the resolve of NATO,” manipulating and creating distrust in the U.S. electoral system, and exacerbating disagreements and divisions between the U.S. and the European Union.

This strategy and these goals drive the tactics Russia used in its social media influence operations.

First, the Russian state employed a nominally “private” company, the Internet Research Agency, to run social media influence operations. The Internet Research Agency was funded by Concord Management and Consulting, a company controlled by a man known as “Putin’s chef” (Yevgeniy Prigozhin), who has long-standing ties to Russian government officials. Prigozhin has even been described by some as “among the president’s [Putin’s] closest friends.”

This is a critical distinction to note when comparing state-run influence campaigns. In Russia, individuals like Prigozhin, who are close to President Putin but are not themselves government officials, provide the means for the Russian government to engage in social media operations while maintaining distance and deniability for the regime.

The effective use of “front” companies and organizations (including both Concord Management and the Internet Research Agency) is a distinctive feature of Russian influence operations.

Second, Russian social media influence tactics have evolved over the past three years. As former Internet Research Agency employee Vitaly Bespalov described it:

Writers on the first floor — often former professional journalists like Bespalov — created news articles that referred to blog posts written on the third floor. Workers on the third and fourth floor posted comments on the stories and other sites under fake identities, pretending they were from Ukraine. And the marketing team on the second floor weaved all of this misinformation into social media.

Essentially, in the early days of the Internet Research Agency, writers would be hired to create and propagate “fake news” and content that supported broader Russian strategic messages and goals. Content from Russian state-run media was also widely propagated by Russian English-language social media accounts.

However, Recorded Future’s research into Russian social media operations before, during, and immediately after the 2018 U.S. midterm elections revealed that at least a subset of Russian-attributed accounts has moved away from propagating “fake news.” Instead, these accounts, which can be classified as “right trolls,” propagate and amplify hyperpartisan messages, or sharply polarized perspectives on legitimate news stories.

Our research indicated that these Russian accounts regularly promulgated content by politicians and mainstream U.S. news sources, such as Fox News, MSNBC, and CNBC. They also regularly propagated posts by hyperpartisan sites, such as the Daily Caller, Hannity, and Breitbart. The vast majority of these proliferated stories and posts were based in fact, but presented a hyperpartisan, or sharply polarized, perspective on those facts.

This evolution, even if it is limited to a subset of Russia’s broader state-directed English-language social media operations, is also unique to Russian operations. By comparison, the limited reporting available on Iranian influence operations points to a model built around amplifying state media: in the summer of 2018, Reuters discovered a network of social media accounts that “were part of an Iranian project to covertly influence public opinion in other countries.” The accounts were run by an organization called the International Union of Virtual Media (IUVM), propagated “content from Iranian state media and other outlets aligned with the government in Tehran across the internet,” and acted as a “large-scale amplifier for Iranian state messaging.”

While this evolution and the expansive weaponization of many different types of content may reflect how long the Russian government has been conducting social media influence operations, it is also a distinct feature of Russian operations.

Third, using social media operations to “undermine unity, destabilise democracies, erode trust in democratic institutions,” and to sow general popular discontent and discord is also a uniquely Russian technique designed to support uniquely Russian strategic goals. Again, Russia’s strategic goals are rooted in the desire to reorient and disrupt the entire Western-dominated international system. Therefore, we assess that the efforts of Russian social media influence operations to destabilize, erode trust, promote chaos, and sow popular discontent align completely with this overarching disruptive goal.

We believe Russian social media influence operations are disruptive and destabilizing because that is Russia’s primary strategic goal. National interests and strategy drive social media influence operations in the same way that they drive traditional intelligence, military, and cyber operations. As a result, each nation’s influence operations use different tactics and techniques, because each supports different broad strategic goals. As we discuss below, Chinese social media operations use techniques different from Russia’s because China’s strategic goals differ from Russia’s.

The Chinese Domestic Model of Social Media Influence Operations

As documented in several research histories of Chinese state control over the internet, including “Censored: Distraction and Diversion Inside China’s Great Firewall” and “Contesting Cyberspace in China: Online Expression and Authoritarian Resilience,” the Chinese state has pioneered internet censorship and social media influence operations since at least the late 1990s.

Internet control and surveillance were initially introduced under the guise of the Golden Shield Project (金盾工程), a massive series of legal and technological initiatives meant to improve the intelligence assessments and surveillance capabilities of the national police force. Among the techniques developed at the time was a system for blocking and censoring content known as the “Great Firewall.” The term “Great Firewall” was coined in a June 1997 Wired magazine article in which an anonymous Communist Party official stated that the firewall was “designed to keep Chinese cyberspace free of pollutants of all sorts, by the simple means of requiring ISPs [internet service providers] to block access to ‘problem’ sites abroad.” Since then, the techniques of information control have expanded well beyond the simple blocking employed by early iterations of the Great Firewall. The information-control regime in China has evolved to include a dizzying array of techniques, technologies, and resources:

- Blocking traffic via IP address and domain

- Mobile application bans

- Protocol blocking, specifically Virtual Private Network protocols and applications

- Filtering and blocking keywords in domains (URL filtering)

- Resetting TCP connections

- Packet filtering

- Distributed denial-of-service (DDoS) attacks (the so-called Great Cannon)

- Man-in-the-middle (MitM) attacks

- Search engine keyword filtering

- Government-paid social media commenters and astroturfers

- Social media account blocking, topic filtering, content censorship

- State-run media monopoly and censorship

- Social Credit System

- Mandatory real-name account registration

This tool set, combined with now-ubiquitous mass physical surveillance systems, places China at the forefront of integrating information technology, influence operations, surveillance, and censorship in a model that two scholars from the Mercator Institute for China Studies (MERICS) call “IT-based authoritarianism.”

In addition to the constraints imposed by the Great Firewall and content censorship, the Chinese state also employs a series of active disinformation and distortion measures to influence domestic social media users. One of the most widely studied has been the so-called “50 Cent Party.”

The 50 Cent Party is a group of people hired by the Chinese government to “surreptitiously post large numbers of fabricated social media comments, as if they were the genuine opinions of ordinary Chinese people.” The name derives from a rumor that these fake commentators were paid 50 Chinese cents per comment, a claim that has been largely disproven.

This fabrication of social media comments and sentiment is commonly known as “astroturfing.” Among scholars of the Chinese domestic social media environment, there is much disagreement about the goals of government-paid astroturfers.

One study by professors at Harvard, Stanford, and UC San Diego, published in April 2017, estimated that one in every 178 social media posts is fabricated by the government and that these comments and campaigns are focused on and directed at specific topics or issues. These scholars also assessed that domestic social media influence operations focus primarily on “cheerleading,” that is, presenting or furthering a positive narrative about the Chinese state:

We have also gone another step and inferred that the purpose of 50¢ [50 Cent Party] activity is to (a) to stop arguments (for which distraction is a more effective than counter-arguments) and (b) to divert public attention from actual or potential collective action on the ground ... That the 50¢ party engages in almost no argument of any kind and is instead devoted primarily to cheerleading for the state, symbols of the regime, or the revolutionary history of the Communist Party. We interpret these activities as the regime’s effort at strategic distraction from collective action, grievances, or general negativity, etc.

Conversely, a separate set of scholars at the University of Michigan, who also examined posts from the 50 Cent Party astroturfers, determined that at least one in every six posts on Chinese domestic social media was fabricated by the government. Further, these scholars argued that less than 40 percent of astroturfed comments could be classified as “cheerleading” and that the rest were a combination of vitriol, racism, insults, and rage against events or individuals. They additionally argue that censors and state-sponsored influence campaigns focus much of their resources on “opinion leaders” and users with large numbers of followers as opposed to simply intervening based on content.

The state is consistently focused on the identity and status of the user rather than the specific content of the post. The regime is interested in reducing counter-hegemonic space via targeted efforts to co-opt or repress individuals who are influential voices in the online public sphere.
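As a rough scale check on the two estimates discussed above (simple arithmetic on the cited figures, not a calculation reported by either study, and assuming both figures describe comparable populations of posts, which they may not), the shares of fabricated posts and their ratio are:

```latex
\[
\frac{1}{178} \approx 0.56\%, \qquad
\frac{1}{6} \approx 16.7\%, \qquad
\frac{1/6}{1/178} = \frac{178}{6} \approx 29.7
\]
```

On these numbers, the University of Michigan estimate implies roughly 30 times as much government-fabricated content as the April 2017 study, and because it is stated as a lower bound (“at least one in every six”), the gap could be wider still.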

While this debate continues in the study of China’s domestic social media environment, to date, nobody has thoroughly examined whether the Chinese government uses the same techniques in the foreign-language space. Given the government’s extensive experience with domestic social media influence operations, the question arises of how it uses foreign social media to influence the American public.

China’s Strategic Goals

Like the Russian government, the Chinese Communist Party has sought for decades to influence foreign opinions of China. In a November 2018 report published by the Hoover Institution, more than 30 of the West’s preeminent China scholars presented the findings of their working group on China’s influence operations abroad:

China’s influence activities have moved beyond their traditional United Front focus on diaspora communities to target a far broader range of sectors in Western societies, ranging from think tanks, universities, and media to state, local, and national government institutions. China seeks to promote views sympathetic to the Chinese government, policies, society, and culture; suppress alternative views; and co-opt key American players to support China’s foreign policy goals and economic interests. Normal public diplomacy, such as visitor programs, cultural and educational exchanges, paid media inserts, and government lobbying are accepted methods used by many governments to project soft power. They are legitimate in large measure because they are transparent. But this report details a range of more assertive and opaque “sharp power” activities that China has stepped up within the United States in an increasingly active manner. These exploit the openness of our democratic society to challenge, and sometimes even undermine, core American freedoms, norms, and laws.

That the Chinese government has sought to use state and Party resources to influence how Americans view China is not new. What separates the influence operations of 2019 from those of the past 40 years are two factors: first, the ubiquity and impact of social media, and second, the expanded intent and scope of the operations themselves.

First is the proliferation of social media platforms, the increasingly broad range of services offered, and the ability to engage with (and not just broadcast to) the intended audience. Over the course of the past decade, social media platforms have evolved to play an ever-expanding role in the lives of users. In the United States, Americans get their news equally from social media and news sites, spend more than 11 hours per day on average “listening to, watching, reading, or generally interacting with media,” and express varying levels of trust in the reliability of information on social media. Further, social media companies increasingly offer users a wider array of services, pulling more of the average user’s time and attention to their platforms.

Second, the intent and scope of China’s influence operations have evolved. As the Hoover Institution paper identified, President Xi has expanded and accelerated a set of policies and activities that “seek to redefine China’s place in the world as a global player.” At the same time, these activities seek to undermine traditional American values (like the freedoms of press, assembly, and religion) that Chinese leadership increasingly views as threatening to its own system of authoritarian rule.

Former Australian prime minister and China expert Malcolm Turnbull described these expanded and accelerated influence activities as “covert, coercive, or corrupting,” underscoring the transformation in scope and focus of these operations under President Xi.

At the national policy level, China’s influence operations are driven by its strategic goals and objectives. First and foremost, China seeks a larger role in and greater influence on the current international system. While scholars disagree on the extent to which China, under Xi Jinping, wishes to reshape the current post-World War II international system, most argue that China does not wish to supplant the United States as the world’s only hegemon; instead, it seeks to exercise greater control and influence over global governance. As summarized in the 2018 U.S. Department of Defense Annual Report to Congress on China:

China’s leaders increasingly seek to leverage China’s growing economic, diplomatic, and military clout to establish regional preeminence and expand the country’s international influence. “One Belt, One Road,” now renamed the “Belt and Road Initiative” (BRI), is intended to develop strong economic ties with other countries, shape their interests to align with China’s, and deter confrontation or criticism of China’s approach to sensitive issues.

Greater influence within the international system, regional stability under Chinese conditions, development of a more capable military, and reunification with Taiwan all fall under Xi Jinping’s so-called “Chinese dream of national rejuvenation.” The “Chinese dream” is portrayed by the Chinese government and media as unquestionably good and positive for the international community, a message that provides the foundation for foreign influence operations. Advocates of the “Chinese dream” argue that a prosperous and strong China is good for the world and does not pose a threat to any other country, because China will never seek hegemony or territorial expansion.

In his closing speech to the 13th National People’s Congress (NPC) in March 2018, President Xi reinforced China’s commitment to “build a community of shared future for mankind, take responsibility in defending world peace and stability, and make contributions to deliver better lives for all people around the globe.”

Xi went further, describing the implications of the “Chinese dream” as unfailingly positive and advantageous for the world at large:

China wants to contribute the Chinese wisdom to the global governance and to show the world its determination to work for an equal, open, and peaceful world. Under this principle, China wants to build “a community with a shared future for mankind.”

The contrast between the scope and tone of China’s strategic goals and those of Russia is a critical point. China’s message to the world is positive, and argues that China’s rise will be beneficial, cooperative, and constructive for the global community. In comparison, Russia’s strategic goals are more combative, revolutionary, and disruptive, all traits that have characterized Russian social media influence operations since 2015.

‘Grabbing the Right to Speak’

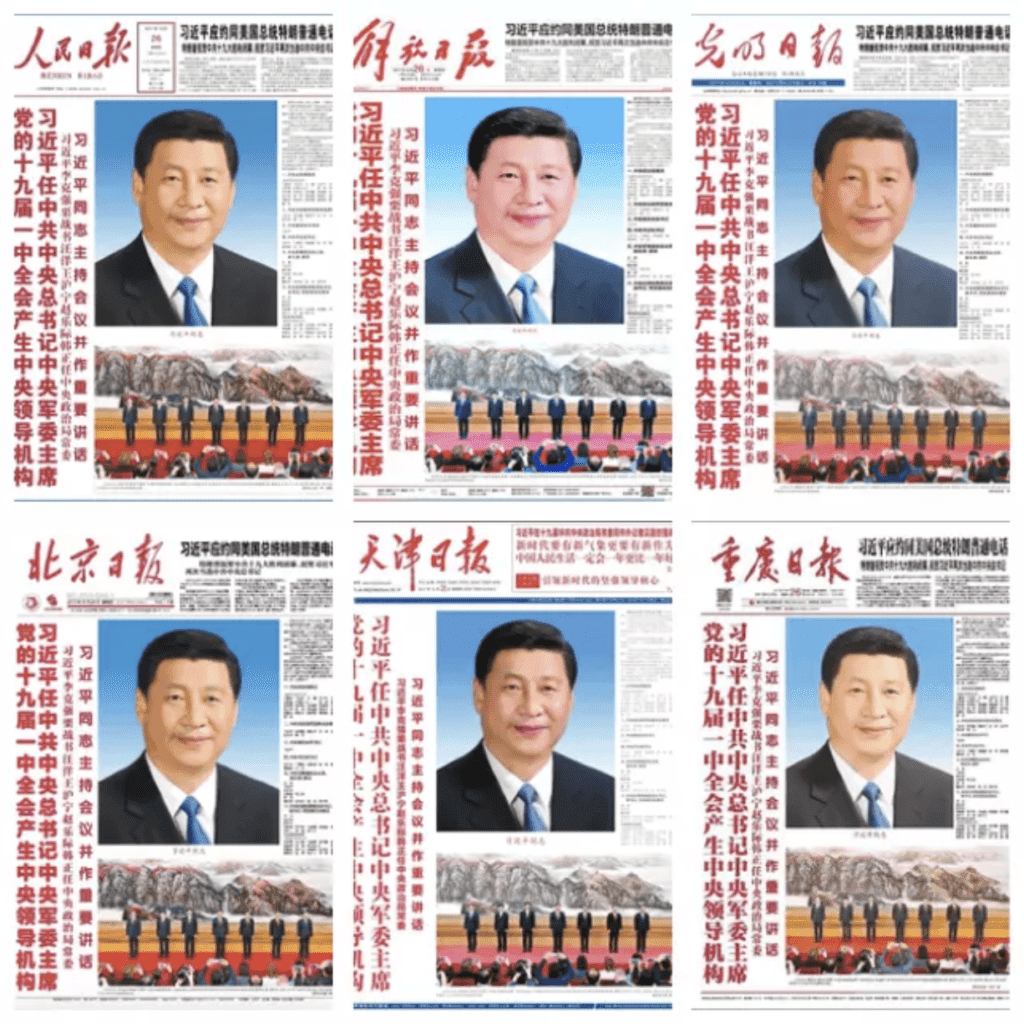

The media environment in China is nearly entirely state-owned, state-controlled, or subservient to the interests of the state. Almost all national and provincial-level news organizations are state-run and monitored, and the Communist Party’s Propaganda Department issues weekly censorship guidelines. It is not unusual for the top newspapers and websites in China to all publish the exact same headline.

Images from the front pages of the People’s Daily, the PLA Daily, the Guangming Daily, the Beijing Daily, the Tianjin Daily, and the Chongqing Daily, as identified by Quartz.

This duplication typically occurs around events of strategic importance to the Communist Party and Chinese leadership, such as the annual meetings of the National People’s Congress (NPC) and the 2018 China-Africa Summit.

Further, research has demonstrated that propaganda, the foundation for Chinese state-run foreign influence operations, can be highly effective even when it is perceived as overt, for the following five reasons:

- People are poor judges of true versus false information, and they do not necessarily remember that particular information was false.

- Information overload leads people to take shortcuts in determining the trustworthiness of messages.

- Familiar themes or messages can be appealing, even if they are false.

- Statements are more likely to be accepted if backed by evidence, even if that evidence is false.

- Peripheral cues, such as an appearance of objectivity, can increase the credibility of propaganda.

State-run media occupies a specific role in the Party’s efforts to influence foreign opinions of China. The U.S. Department of State described China’s state-run media as the “publicity front” for the Communist Party in its 2017 China Country Report on Human Rights Practices:

The CCP and government continued to maintain ultimate authority over all published, online, and broadcast material. Officially, only state-run media outlets have government approval to cover CCP leaders or other topics deemed “sensitive.” While it did not dictate all content to be published or broadcast, the CCP and the government had unchecked authority to mandate if, when, and how particular issues were reported, or to order that they not be reported at all. In a widely reported 2016 visit to the country’s main media outlets, President Xi told reporters that they were the “publicity front” of the government and the Party and that they must “promote the Party’s will” and “protect the Party’s authority.”

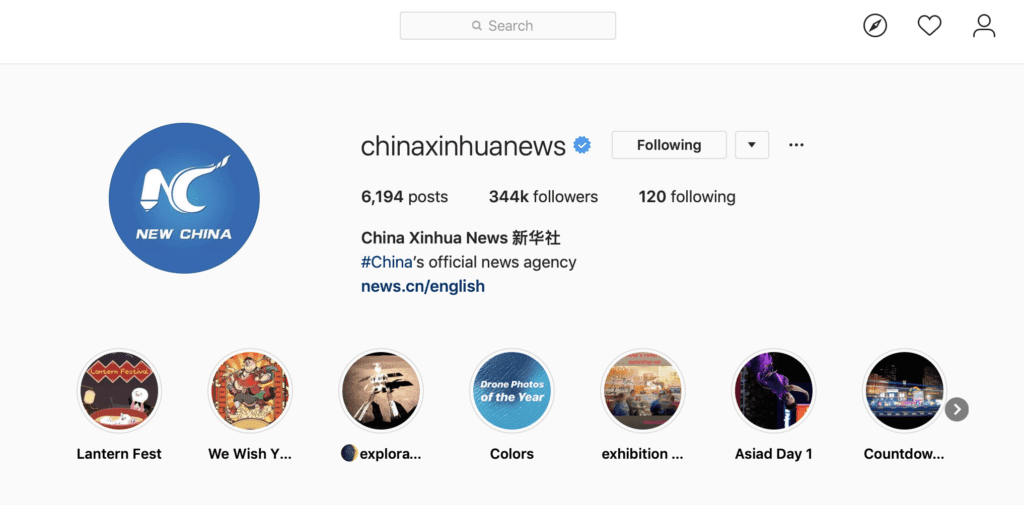

Critical to promoting the Party’s will and protecting its authority are two widely distributed and heavily digitized news services: Xinhua and the People’s Daily. Xinhua News Agency is the self-described “official news agency” of China and has been described by Reporters Without Borders as “the world’s biggest propaganda agency.” Although Xinhua attempts to brand itself as another wire news service, it plays a much different role from that of other wire news services (such as Reuters, Associated Press, and so on) and functions completely at the behest and will of the Party. Moreover, Xinhua can be seen as an extension of China’s civilian intelligence service, the Ministry of State Security (MSS):

The PRC's state domestic news agency, Xinhua, posts correspondents overseas and routinely provides the Second Department of the Ministry of State Security personnel with journalistic cover. It provides Chinese leadership with classified reports on domestic and international events and demonstrates many of the characteristics of a regular intelligence agency.

The extent to which the MSS guides Xinhua’s reporting or publishing is unclear. However, because Xinhua routinely provides journalistic cover to MSS personnel posted overseas, the MSS cannot be excluded from a role in Xinhua’s overseas reporting and messaging.

Xinhua has an extensive English-language site and an active presence on several U.S.-based social media platforms. In January 2018, a New York Times investigation found that Xinhua had purchased social media followers and reposts from a “social marketing” company called Devumi. Because our research focused primarily on content propagation and reposts, we were unable to confirm the findings regarding Xinhua’s followers. However, over the course of our research on top reposters and propagators, we noted that many of the top reposter accounts mimicked the bot setup and techniques used by Devumi. These techniques are easy to replicate, so while we assess that the top 20 Xinhua reposters are either broadcast or spam bots, we were unable to determine who owns the accounts.

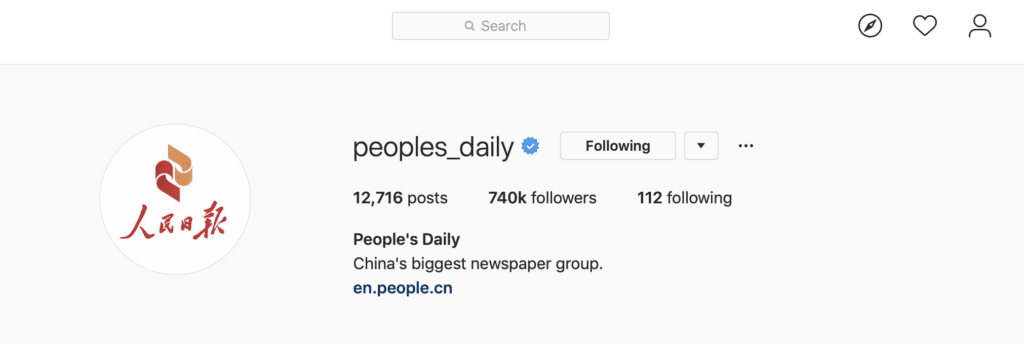

The People’s Daily is part of a collection of papers and websites that identifies itself as “China’s largest newspaper group.” Like Xinhua, the People’s Daily is used by both the MSS and China’s military intelligence department (formerly known as the 2PLA) as cover for sending intelligence agents abroad, while presenting itself as offering a benign, Chinese perspective on global news. The People’s Daily also runs an English-language news site and is active on several U.S.-based social media platforms.

It is important to note that while the intelligence services have not traditionally held a prominent role in influence operations, they are, as Peter Mattis noted, one of “multiple professional systems operating in parallel” within China to achieve national-level goals and objectives.

The intelligence system, and the MSS in particular, has a role in shaping and influencing Western perceptions of and policies on China, just as the state-run media, United Front Work Department, and propaganda systems all do. While the strategic objectives are determined, prioritized, and disseminated from Xi Jinping down, each system and ministry uses its own tools and resources to achieve those goals. Some of these resources and tools overlap, compete with each other, and even degrade the effectiveness of those leveraged by other ministries and systems. The sphere of influence operations is no different. Each system has similar and dissimilar tools, but the same objectives.

The Chinese Model of English-Language Social Media Influence Operations

In late 2018 and early 2019, we studied all of the accessible English-language social media posts on Western platforms from accounts run by Xinhua, the People’s Daily, and four other Chinese state-run media organizations geared toward a foreign audience. Our research indicates that China’s approach to influencing foreign audiences differs vastly from its approach in the domestic social media space. While the seed material for the influence campaigns is the same state-run media content, there is likely no English-language equivalent to the 50 Cent Party or its army of social media commenters.

Chinese state-run accounts are active social media users. On average, over the date range we studied, state-run accounts posted 60 to 100 times per day across several Western platforms. Xinhua, CGTN, and the Global Times were the most active content generators on these social media platforms, and posts by the People’s Daily, Xinhua, and CGTN were favorited or liked at the highest rates.

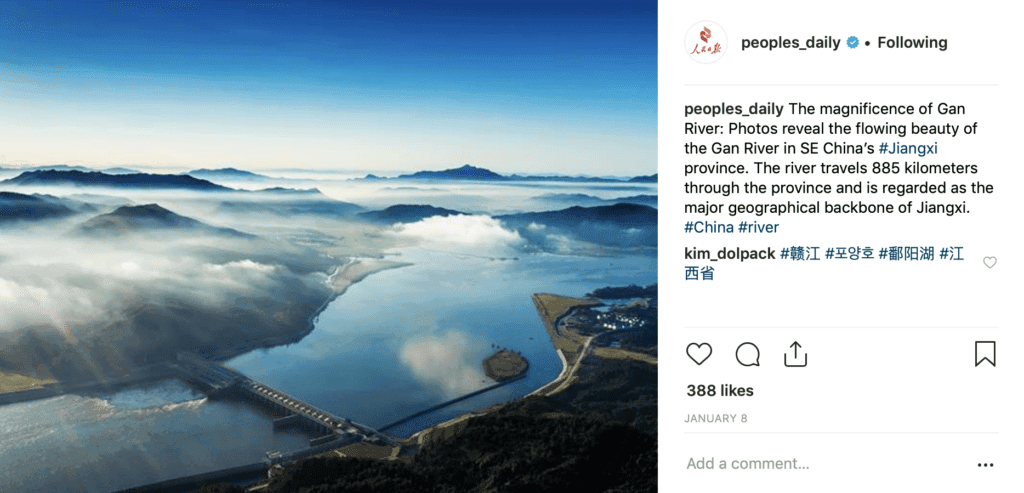

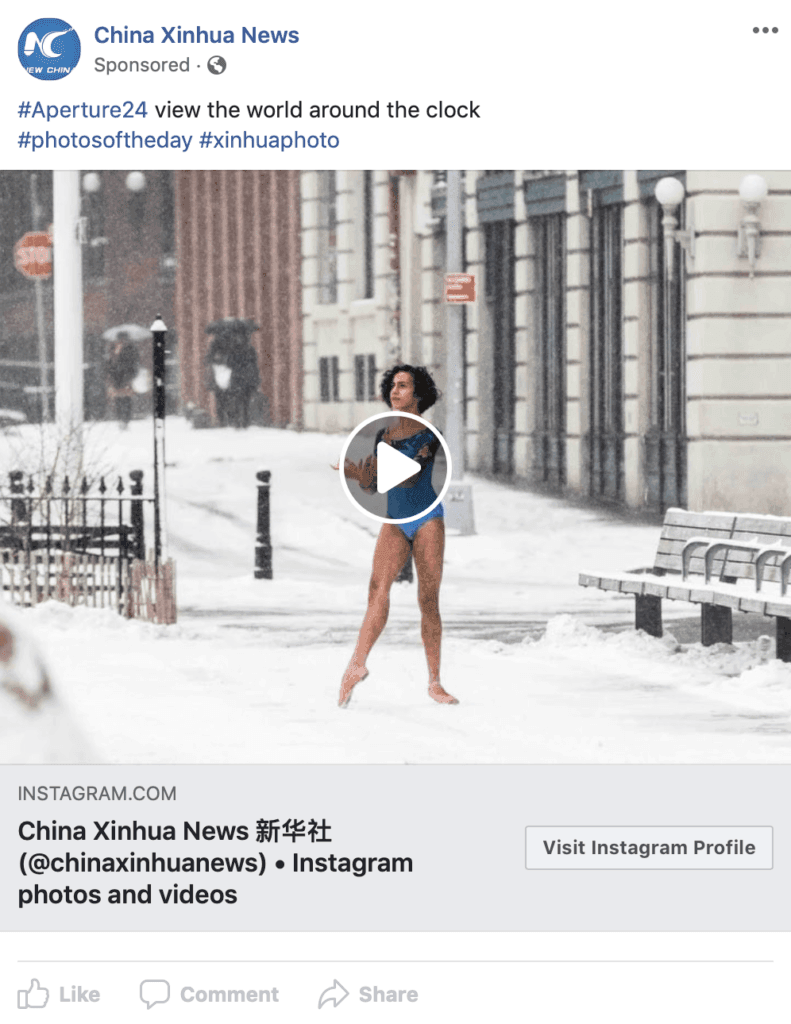

Case Study: Instagram

Examining how Xinhua and the People’s Daily use Instagram offers a good example of how the Chinese state exploits Western social media. Both Xinhua and the People’s Daily have verified accounts on Instagram and post regularly. On average, over this date range, each account posted around 26 times per day.

Instagram page for Xinhua.

Instagram page for the People’s Daily.

Both accounts have a large number of followers and follow few other accounts. The posts — photographs and videos — are overwhelmingly positive and present any number of variations on a few core themes:

- China’s vast natural beauty

- Appealing cultural traditions and heritage

- Overseas visits by Chinese leaders or visits of foreign leaders to China

- The positive impact China is having on the world in science, technology, sports, etc.

- Breaking global news

Instagram post from the People’s Daily.

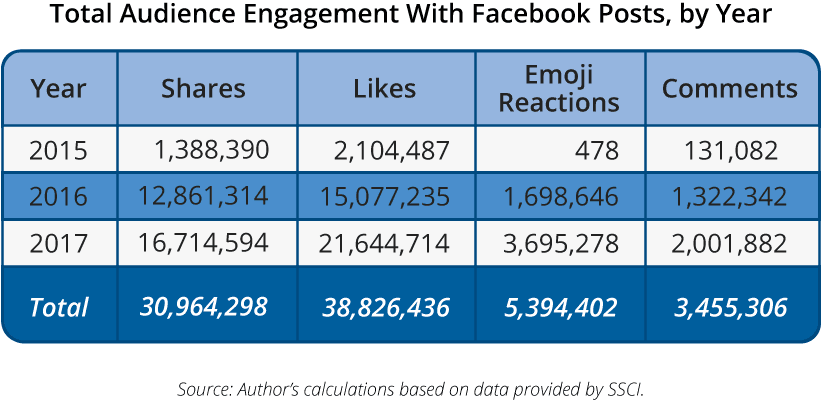

Audience engagement is a common metric for assessing the impact of social media, and on that measure these two Chinese accounts offer a useful comparison with Russian Internet Research Agency (IRA)-linked accounts over the past several years, as laid out in the table below.

Modified table from the University of Oxford’s December 2018 report analyzing audience engagement with IRA-linked Facebook posts.

Over the nearly four months of Instagram data used for this research, Xinhua and the People’s Daily published a combined 6,072 posts, which drew 5,431,009 likes and 17,039 comments. If we assume that these rates stayed relatively consistent over the course of 2018, scaling the totals by three (from roughly four months to a full year) puts the two accounts’ annual likes at roughly 16,293,027 and their comments at roughly 51,117.
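The annual estimate is a simple proportional scaling, and the same totals also account for the posting rate of about 26 posts per account per day. The sketch below reproduces that arithmetic. It assumes, as the text does, a factor of three to go from roughly four months to a full year, and it takes the 114-day window from the comparison table; the constant and function names are illustrative only.

```python
# Totals reported for Xinhua and the People's Daily on Instagram.
POSTS = 6_072
LIKES = 5_431_009
COMMENTS = 17_039

# Assumptions: the data window is the 114 days used in the comparison table,
# and a full year is approximated as three times a roughly four-month window.
WINDOW_DAYS = 114
ACCOUNTS = 2
ANNUAL_FACTOR = 3


def annualize(total: int, factor: int = ANNUAL_FACTOR) -> int:
    """Scale a roughly four-month total up to a rough 12-month estimate."""
    return total * factor


annual_likes = annualize(LIKES)
annual_comments = annualize(COMMENTS)
# Average posts per account per day across the window.
posts_per_account_per_day = POSTS / WINDOW_DAYS / ACCOUNTS

print(f"Estimated 2018 likes:      {annual_likes:,}")
print(f"Estimated 2018 comments:   {annual_comments:,}")
print(f"Posts per account per day: {posts_per_account_per_day:.1f}")
```

A linear scale factor is the crudest possible extrapolation; it ignores seasonal spikes such as the Spring Festival, which is why the resulting figures are labeled as rough.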

Facebook and Instagram are different platforms, each built around a different medium of communication, but the numbers are still useful for comparing the techniques used and the efficacy of state-directed influence operations. Facebook users comment more widely, and that platform offers two additional means of propagating content (shares and emoji reactions) that Instagram does not.

To compare Instagram usage by Chinese and Russian state-supported influence campaigns, we used the data analysis published in New Knowledge’s December 2018 disinformation report. To approximate the Russian numbers over a comparable 114-day period, we divided the total numbers into segments to get a rough average for comparison. Again, because we do not have access to the underlying data used by New Knowledge, the table below is intended only as a rough comparison to estimate efficacy. Further, we profiled only two of the most prolific Chinese state-run accounts, not the entire suite of accounts leveraged for influence operations.

The table below estimates audience engagement from Russian and Chinese state-run influence campaigns on Instagram.

| Metric | Russia | China |
|---|---|---|
| Average Number of Posts Over a 114-Day Time Frame | 20,194 | 6,072 |
| Number of Likes | 31,844,639 | 5,431,009 |
| Average Likes per Post | 1,577 | 894 |
| Comments | 698,203 | 17,039 |
| Comments per Post | 35 | 3 |
| Total Followers | 3,391,116 | 1,084,000 |
| Total Engagements | 32,542,842 | 5,448,048 |

These two Chinese influence profiles reached a level of audience engagement roughly one-sixth as large as the entire Russian IRA-associated campaign targeting the United States on Instagram.
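The derived rows of the comparison (per-post averages and total engagements) and the one-sixth ratio can be recomputed directly from the raw totals. The sketch below assumes, consistent with the table’s figures, that total engagements are likes plus comments; the `Campaign` class is a hypothetical helper for illustration, not part of any published tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Campaign:
    """Hypothetical container for the raw Instagram totals of one campaign."""
    name: str
    posts: int
    likes: int
    comments: int

    @property
    def engagements(self) -> int:
        # Assumption: engagements = likes + comments (matches the table's totals).
        return self.likes + self.comments

    @property
    def likes_per_post(self) -> float:
        return self.likes / self.posts

    @property
    def comments_per_post(self) -> float:
        return self.comments / self.posts


russia = Campaign("Russia (IRA, estimated)", posts=20_194, likes=31_844_639, comments=698_203)
china = Campaign("China (Xinhua + People's Daily)", posts=6_072, likes=5_431_009, comments=17_039)

for c in (russia, china):
    print(f"{c.name}: {c.engagements:,} engagements, "
          f"{c.likes_per_post:,.0f} likes/post, {c.comments_per_post:.1f} comments/post")

# Share of the Russian campaign's engagement reached by the two Chinese accounts.
ratio = china.engagements / russia.engagements
print(f"China / Russia engagement ratio: {ratio:.3f} (about 1/{1 / ratio:.0f})")
```

Deriving the ratios from the totals in code, rather than copying them by hand, keeps every derived figure consistent with the raw counts it comes from.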

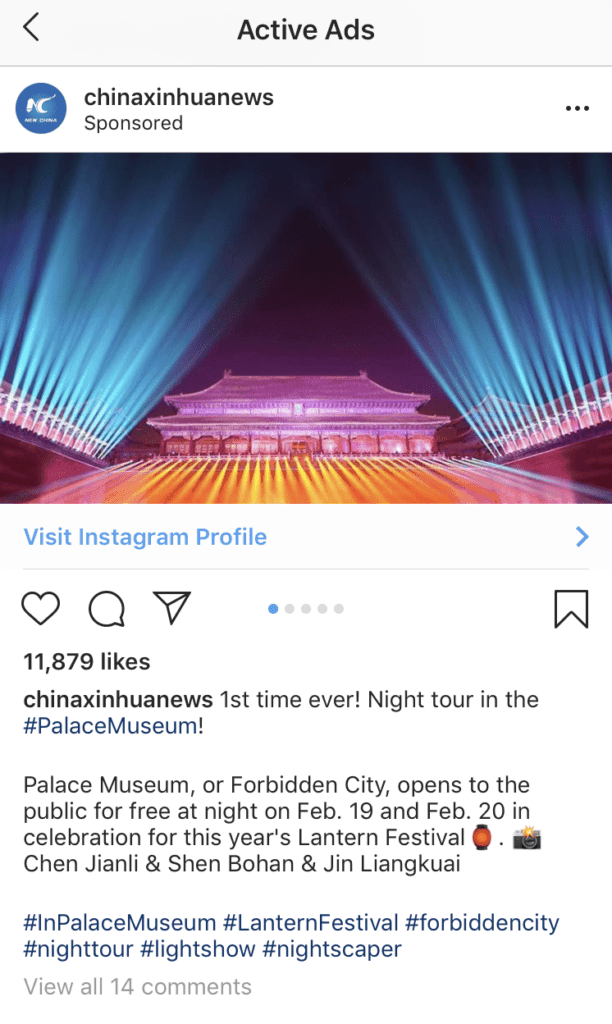

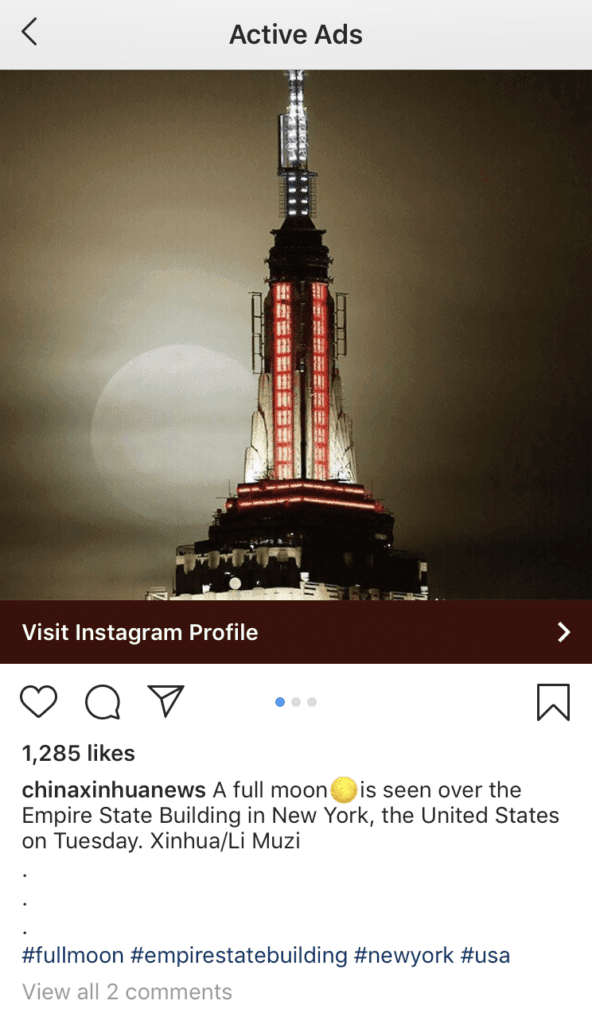

Xinhua also used paid advertisements to promote specific posts during this time frame. The Instagram ad tracker does not allow a user to view all advertisements paid for by a specific account, so the total number of Instagram advertisements used by Chinese influence accounts is unknown at this time. However, the active advertisements we were able to identify followed the core themes identified above and further promoted the image of Xinhua as a wire news service, and not state-run propaganda.

Paid advertisements on Instagram from Xinhua. (Source: Instagram)

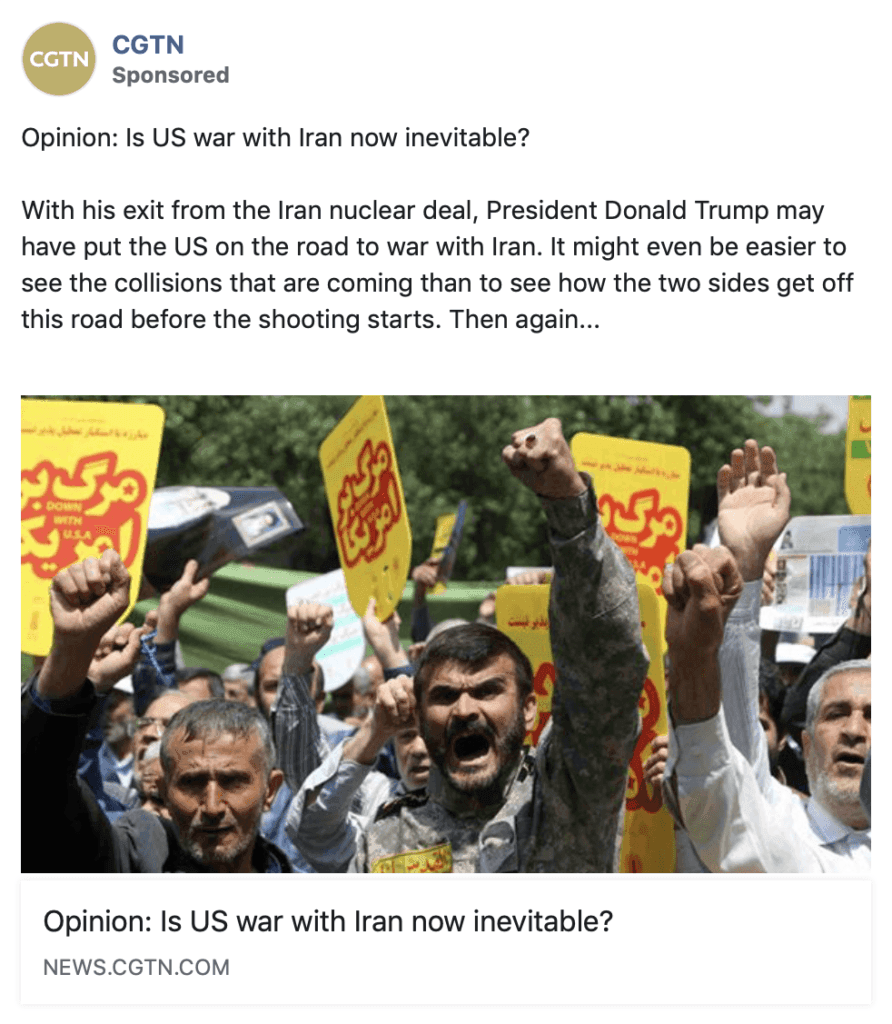

Use of Paid Advertisements

Throughout our research time frame, we also observed the use of paid advertisements across multiple platforms. Depending on the platform, paid advertisements are indicated to users via a “sponsored,” “promoted,” or similar notation within the post itself. Each platform uses a unique algorithm to place advertisements within the feeds of its users, based on the individual user’s preferences, likes, dislikes, and more.

Most platforms provide a mechanism both for identifying whether a post is a paid advertisement and for researching the ads purchased by specific accounts. Advertisements are not searchable indefinitely, and the length of time each platform keeps this data accessible to users varies. These limitations constrained our ability to quantify what percentage of posts published by Chinese state-run influence accounts were paid advertisements versus organic posts.

In terms of broad, cross-platform trends, we observed Xinhua, the People’s Daily, CGTN, and China Daily running paid advertisements. Consistent with reporting from the Digital Forensic Research Lab, we observed overtly political advertisements from Chinese state-run influence accounts across a number of platforms. Facebook identified and retained many of these paid advertisements in its archive of “ads related to politics or issues of national importance,” launched on May 7, 2018. Facebook requires advertisements of this kind not only to be authorized and reviewed but also to carry a specific “paid for by” disclaimer in addition to the notation indicating that the post is a paid advertisement.

However, none of the advertisements run by Xinhua or China Daily on Facebook that were retained as part of the “political” archive over our research period were annotated in the Facebook platform as “paid for by” at the time they were run. Therefore, users viewing the posts during the period in which they were active would not have known that the advertisements were deemed overtly “political” or of national importance, or even that they were ultimately purchased by the Chinese state.

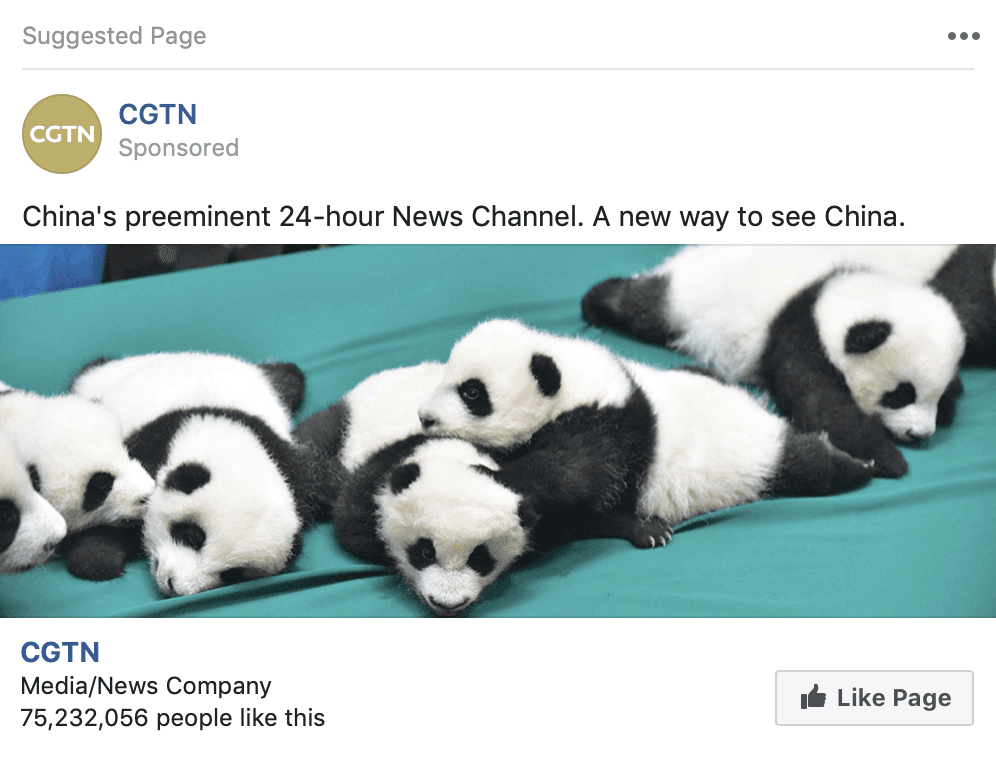

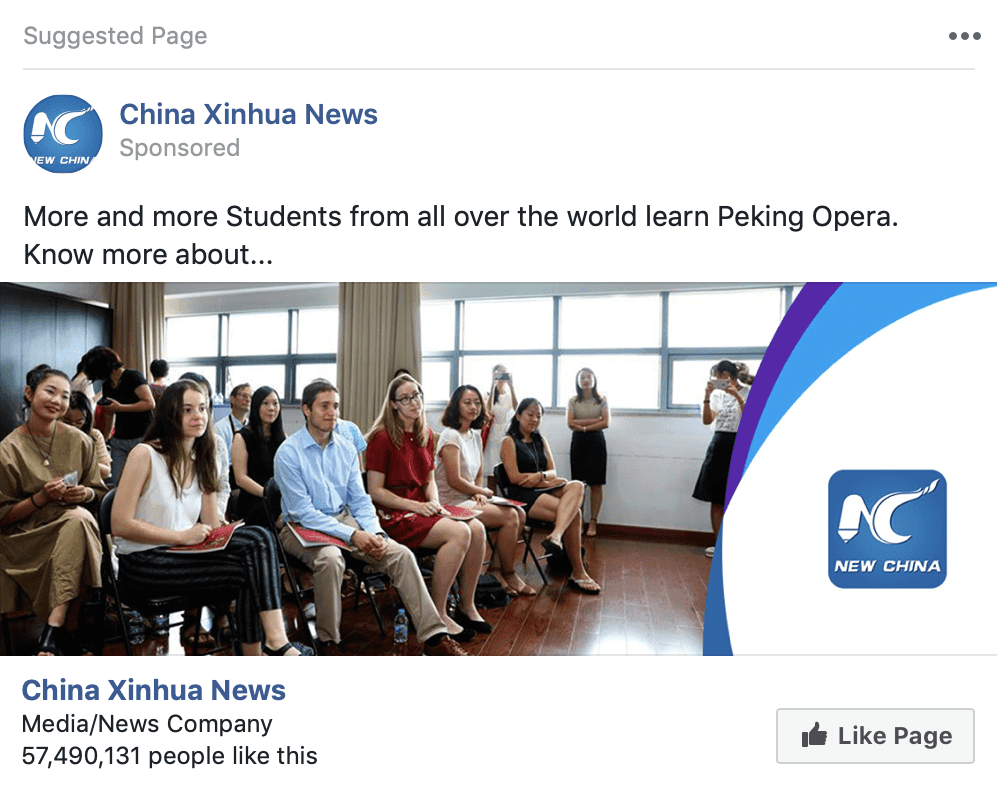

Three examples of paid advertisements later identified by Facebook as “political” or of “national importance.”

From our data set, the advertisements that were retained by Facebook as part of its political archive were substantively different from other advertisements purchased during that same time frame and not archived or tagged. The majority of paid advertisements from these state-run accounts appealed to users to like or follow the account for access to global and China-specific news.

Three examples of paid advertisements not identified by Facebook as “political” or of “national importance.”

Paid advertisements on other platforms showed a similar distribution of “political” and general-interest posts. However, those platforms did not provide tools to distinguish paid posts that were “political” or of “national importance” from more general posts, or to show who ultimately purchased the advertisement.

Sentiment Analysis

As detailed in our section on China’s strategic goals, China seeks to convince the world that its development and rise are unfailingly positive, beneficial, cooperative, and constructive for the global community. While sentiment analysis can be inexact and has a mixed track record, it is most useful on large data sets like this one. We used the VADER (Valence Aware Dictionary and sEntiment Reasoner) sentiment analysis tool and the code from its GitHub repository. Typical thresholds applied to VADER’s normalized compound score are:

- Positive: 0.05 or greater

- Neutral: Greater than -0.05 and less than 0.05

- Negative: -0.05 or less
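To make the thresholds concrete, the sketch below maps VADER compound scores to labels and then labels the average score across a set of posts. It assumes the scores have already been computed, for example with `SentimentIntensityAnalyzer().polarity_scores(text)["compound"]` from the `vaderSentiment` package; the five scores shown are hypothetical, and a score of exactly ±0.05 is treated as positive or negative, as in the VADER README.

```python
from statistics import mean

# Thresholds on VADER's normalized "compound" score (range -1 to 1),
# following the VADER README.
POSITIVE_THRESHOLD = 0.05
NEGATIVE_THRESHOLD = -0.05


def label(compound: float) -> str:
    """Map a single compound score to positive, neutral, or negative."""
    if compound >= POSITIVE_THRESHOLD:
        return "positive"
    if compound <= NEGATIVE_THRESHOLD:
        return "negative"
    return "neutral"


# Hypothetical compound scores for five posts. In practice each would come from
# SentimentIntensityAnalyzer().polarity_scores(post_text)["compound"]
# in the vaderSentiment package.
scores = [0.62, 0.0, 0.34, -0.21, 0.05]

print([label(s) for s in scores])
print("Average:", round(mean(scores), 3), "->", label(mean(scores)))
```

Labeling the mean score, as here, summarizes an account’s overall tone; counting labels per post instead would show how much of that tone comes from a few strongly positive posts.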

Sentiment score of Chinese influence accounts’ posts from October 2018 through January 2019.

On average, Chinese state-run influence accounts projected positive sentiment to platform users, which is consistent with the strategic goal of portraying China’s development and rise as positive and beneficial for the global community.

Social Media Messages

We also analyzed the content and hashtags associated with state-run influence accounts. Again, across social media platforms, the content and messages propagated were overwhelmingly positive. Top hashtags and specific content varied by account, month, and media outlet; however, hashtags for Chinese senior leaders (such as Xi Jinping and Li Keqiang) remained among the most popular for all accounts over the entire research duration. This included hashtags for official travel and state visits (such as #Xiplomacy and #XiVisit).
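Per-account, per-month hashtag rankings like those described above can be produced with a simple tally. This is a minimal sketch, not the pipeline used for this research: the `(account, month, text)` record layout, the `top_hashtags` helper, and the sample posts are all hypothetical, and hashtags are lowercased so that case variants count together.

```python
import re
from collections import Counter, defaultdict

# A hashtag is "#" followed by one or more word characters.
HASHTAG = re.compile(r"#\w+")


def top_hashtags(posts, n=3):
    """Tally hashtags per (account, month) and return the n most common for each."""
    counts = defaultdict(Counter)
    for account, month, text in posts:
        # Lowercase so #Xiplomacy and #xiplomacy are counted as one tag.
        counts[(account, month)].update(tag.lower() for tag in HASHTAG.findall(text))
    return {key: counter.most_common(n) for key, counter in counts.items()}


# Hypothetical posts: (account, month, text)
posts = [
    ("Xinhua", "2018-11", "#XiJinping arrives for #G20 summit #Xiplomacy"),
    ("Xinhua", "2018-11", "#G20 leaders meet in Buenos Aires"),
    ("PeoplesDaily", "2018-12", "#AmazingChina winter scenery #heartwarmingmoments"),
]

for key, tags in top_hashtags(posts).items():
    print(key, tags)
```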

Additionally, each month, a particular event was highlighted universally across the social media accounts we analyzed.

| Month | Event |
|---|---|
| October | CIIE, China International Import Expo in Shanghai |
| November | G20, Group of 20 2018 Summit in Argentina |
| December | 40th Anniversary of China’s Reform and Opening Up |
| January | Chinese Spring Festival |

Each account typically presented its own “take” or message depending on the specialization of the media outlet. For example, the People’s Daily propagated a greater percentage of apolitical, human interest, positive China stories (using hashtags such as #heartwarmingmoments and #AmazingChina) than other outlets, while Xinhua propagated a higher percentage of breaking news stories.

While the messages these accounts propagated align strategically with China’s articulated goals, they also showed responsiveness to international events. This was especially evident after the December 2018 arrest of Huawei Chief Financial Officer Meng Wanzhou in Canada. In the two preceding months, Huawei had been mentioned only minimally in social media posts, and only by the Global Times and China Plus News. Beginning in December, Huawei became a top topic of influence messaging for all accounts, a trend that continued to the end of the study’s time frame.

This alignment of highlighted events and topics is likely a result of the regular rules issued by state propaganda authorities each week to both domestic and foreign outlets.

2018 US Midterm Elections

We assess that these Chinese state-run influence accounts did not attempt a large-scale campaign to influence American voters in the run-up to the November 6, 2018 midterm elections. However, on a small scale, we observed engagement by all of the state-run influence accounts in disseminating breaking news and biased content surrounding President Trump and China-related issues.

The most active account, in terms of opinionated or biased election-related content, was the Global Times, which has long had a reputation for “aggressive editorials” that “excoriat[e] any country or foreign politician whom China has an issue with.” On November 6, the Global Times propagated an article that referred to President Trump as “unstable” and his policies as “volatile and erratic.” The article closed with a refrain that has become familiar across Chinese state-run media since the advent of the trade war:

If the trade war drags on, they will definitely feel pain in the coming years. At that point, China may become a matter of voters' concern. No one can predict who they will vote for, but one thing is for sure: If the worst-case scenario in their economic relations comes about, there will be no winner from the two sides of the Pacific Ocean.

Based on our research, what made this Chinese-propagated content different from Russian election influence attempts was threefold:

- The scale of this content and its dissemination was very limited. Most of these posts were not widely reposted, favorited, or liked, and the actual impact was likely minimal on American voters.

- Chinese content largely did not express a preference for one candidate or party over another. Aside from the comments about President Trump, which have been widely disseminated in Chinese media since 2017, the articles expressed concern or perspectives in relation to issues that China was concerned with, such as the trade war.

- The propagated election-related content was primarily generated by the Global Times and Xinhua. This reliance on state-run media as seed material contrasts with the Russian technique, during the 2018 midterms, of disseminating hyperpartisan perspectives on legitimate news stories from domestic American news sites.

Outlook

Our research demonstrates that social media influence campaigns are not a one-size-fits-all technique. The Russian state has used a broadly negative, combative, destabilizing, and discordant influence operation because that type of campaign supports Russia’s strategic goals to undermine faith in democratic processes, support pro-Russian policies or preferred outcomes, and sow division within Western societies. Because Russia’s strategic goals require covert action and are inherently disruptive, the social media influence techniques it employs are secretive and disruptive as well.

The Chinese state has a starkly different set of strategic goals, and as a result, Chinese state-run social media influence operations use different techniques. Xi Jinping has chosen to support China’s goal to exert greater influence on the current international system by portraying the government in a positive light, arguing that China’s rise will be beneficial, cooperative, and constructive for the global community. This goal requires a coordinated global message and technique, which presents a strong, confident, and optimistic China.

Our research has revealed that the manner in which China has attempted to influence the American population differs from the techniques it uses domestically. While researchers have demonstrated that China does want to present a positive image of the state and Communist Party domestically, the techniques of censorship, filtering, astroturfing, and comment flooding are not viable abroad. We discovered no English-language equivalent to the 50 Cent Party on Western social media. This does not mean that China’s policies, messages, propaganda, and media lack defenders on social media.

Instead, we believe that the Chinese state has employed a plethora of state-run media to exploit the openness of American democratic society and insert an intentionally distorted and biased narrative “for hostile political purposes.” As expertly explained in the Hoover Institution paper, these influence operations are not benign in nature, but support China’s goals to “redefine its place in the world as a global player” by “exploiting America’s openness in order to advance its aims on a competitive playing field that is hardly level.” China uses the openness of American society to propagate a distorted and utopian view of its government and party.

Over the long term, scholars believe that current Chinese leadership actually view core American values and freedoms, such as those of press, assembly, and religion, as “direct challenges to its own form of one-party rule.” It is therefore imperative that we not be complacent when confronting Chinese information manipulation on social media. Identifying the goals and techniques of these influence operations is the first step toward countering their deleterious effects.

At this point, it is valuable to revisit why influence operations and propaganda can be so persuasive, and to use this research to counter them. Again, according to research from RAND, propaganda (and the influence campaigns built on it) is effective for the following five reasons:

- People are poor judges of true versus false information, and they do not necessarily remember that particular information was false.

- Information overload leads people to take shortcuts in determining the trustworthiness of messages.

- Familiar themes or messages can be appealing, even if they are false.

- Statements are more likely to be accepted if backed by evidence, even if that evidence is false.

- Peripheral cues, such as an appearance of objectivity, can increase the credibility of propaganda.

For those who use social media, knowledge is the greatest tool in combating influence operations. Social media users bear a greater responsibility to themselves and the American public to develop better means of detecting and dismissing influence attempts. Corporate, public, and private users should use both this research and other research on state-run influence operations to refine those means and tools to counter the exploitation of our open society and values by foreign governments.
