Putting Usability First: How to Design a Great User Experience

Inspired by companies like SAP, Visma, IBM, and Salesforce, which have shared how they approach user interface design and usability, here’s an inside look at how the Recorded Future product design team builds great user experiences and achieves its usability goals through usability testing, usage metrics analysis, and user research.

Before getting into it, let’s start with some definitions.

Usability Equals Usefulness

Usability is part of the broader term “user experience” (UX) and refers to how easy a product or website is to access and use. Deciding whether a design has high usability (or, more bluntly, whether it is usable or unusable) requires considering its features together with the user, meaning who they are and what they’re trying to accomplish, and their context of use.

The official ISO 9241-11 definition of usability is: “The extent to which a product can be used by specified users to achieve specified goals with effectiveness, efficiency and satisfaction in a specified context of use.”

At Recorded Future, we have shortened this in everyday talk to “usability equals usefulness,” meaning that we measure usability by the extent to which our clients are able to find value in our platform specific to their use cases.

Team Structure and Key Objectives

The product design team consists of a diverse group of highly motivated individuals with many years of combined experience working in B2B and B2C companies, at all stages and in different industries. The team (based in Gothenburg, Sweden) is part of the R&D organization and works closely with the Boston-based product management team.

Understanding how well our designers’ intentions actually meet users’ expectations has always been key at Recorded Future. Therefore, we have conducted usability tests and usage data analysis from the very start. The product design team is responsible for driving usability from a strategic and tactical perspective, for conducting tests and analysis, and for owning the key results and outcomes. Some of the core metrics we use to track usability include, but aren’t limited to:

- Daily Active Users/Monthly Active Users (DAU/MAU): There are many ways to measure engagement and stickiness in a product. We have chosen the ratio of daily active users to monthly active users as a key metric for a few reasons. First and foremost, any number of things can drive a person to a product, but if the product doesn’t deliver, it will immediately show in this metric. Second, as a company with tremendous growth, we have a high portion of new users in the portal every month. Since mid- and long-term users of SaaS products are traditionally more engaged than new ones, this metric pushes us to put extra focus on engaging new users immediately in order to maintain and grow it. Lastly, this metric is a terrific way to benchmark how we’re doing against the industry; good reference figures can be found in the Mixpanel Product Benchmarks Report from 2017.

- Effectiveness, Efficiency, and Satisfaction for Core Use Cases: To quantify the usability of our portal and measure improvements over time, we regularly conduct supervised scripted tests for a number of core use cases. The tests are conducted in person with real users. Each test measures completion rate and number of errors (effectiveness), time to completion (efficiency), and how enjoyable the client found the task (satisfaction).

- Net Promoter Score (NPS): NPS is a highly debated metric. Some argue that it is even dangerous to pay too much attention to it, because the way it is calculated hides product improvements quite effectively. NPS asks users to rate, on a scale from 0 to 10, how likely they are to recommend a product; the score is the percentage of promoters (9 or 10) minus the percentage of detractors (0 to 6). This means that a score of six counts exactly the same as a score of zero: if product improvements moved all of your users from a zero to a six, it wouldn’t show in the NPS at all, which would still be minus 100. For this reason, we have always used NPS in combination with other metrics that better show what this one number doesn’t. We’ll soon publish an update to our last blog post on our NPS score.
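The arithmetic behind these three metrics is simple enough to sketch. The Python below is a minimal, hypothetical illustration, not Recorded Future’s actual tooling: the function names, the `TaskResult` fields, and the 1 to 5 satisfaction scale are all assumptions. For DAU/MAU it assumes one common definition, the average number of distinct daily users over a window divided by the number of distinct users in that window. For NPS it uses the standard formula: percentage of promoters (scores 9 and 10) minus percentage of detractors (scores 0 to 6).

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean


def dau_mau_ratio(events: list[tuple[str, date]], start: date, end: date) -> float:
    """Average daily active users divided by monthly active users.

    `events` are hypothetical (user_id, day) activity records; only events
    between `start` and `end` (inclusive) are counted.
    """
    days = (end - start).days + 1
    daily: dict[date, set[str]] = {}
    monthly: set[str] = set()
    for user_id, day in events:
        if start <= day <= end:
            daily.setdefault(day, set()).add(user_id)
            monthly.add(user_id)
    if not monthly:
        return 0.0
    # Days with no activity count as zero DAU, so divide by the window length.
    avg_dau = sum(len(users) for users in daily.values()) / days
    return avg_dau / len(monthly)


@dataclass
class TaskResult:
    """One participant's attempt at a scripted core use case (hypothetical schema)."""
    completed: bool
    errors: int
    seconds: float
    satisfaction: int  # assumed 1-5 rating of how enjoyable the task was


def summarize_task(results: list[TaskResult]) -> dict[str, float]:
    """Aggregate one core use case across participants."""
    completed = [r for r in results if r.completed]
    return {
        "completion_rate": len(completed) / len(results),  # effectiveness
        "mean_errors": mean(r.errors for r in results),     # effectiveness
        # Efficiency: time to completion, measured only on completed attempts.
        "mean_seconds": mean(r.seconds for r in completed) if completed else float("nan"),
        "mean_satisfaction": mean(r.satisfaction for r in results),
    }


def nps(scores: list[int]) -> float:
    """Net Promoter Score: % promoters (9-10) minus % detractors (0-6)."""
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)
```

For example, `nps([0, 0, 0])` and `nps([6, 6, 6])` both return `-100.0`, which is exactly the blind spot of NPS discussed above. Tracking the mean raw score alongside NPS (0 versus 6 here) is one simple way to make such an improvement visible.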

Usability Testing at Recorded Future

There are plenty of methods for conducting usability testing, but we usually come back to the following four commonly known and used ones:

- Concurrent think aloud (in-person test)

- Remote, unmoderated tests

- Remote, moderated tests

- Supervised scripted tests to collect quantifiable data over time (described in the previous section)

Pictures of team activities from 2018.

Why We Invest in Usability Testing

Usability testing is a great way to cut development costs, for a number of reasons. Here are the top three for our organization:

- Changing a wireframe or prototype costs a fraction of what it costs to change production code. This is not only because production code is harder to change, but also because users will already have started learning how to use the feature. Usability testing during the design phase can identify issues before anything is built.

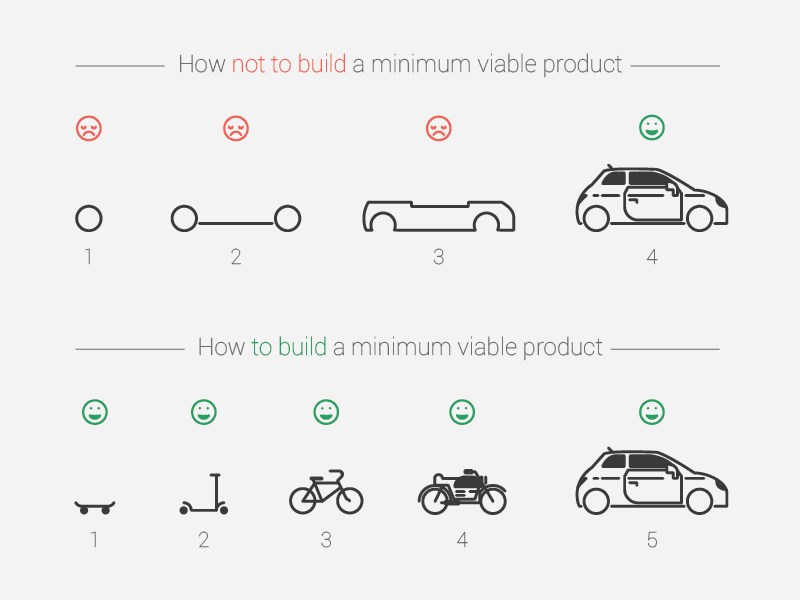

- An agile approach to software development means launching a minimum viable product (MVP) rather than a slice of a full-scale version or, even worse, the whole shebang. Usability testing of an MVP can quickly show whether it does in fact generate value, and in what way, before moving on to a second version.

- The data speaks for itself. It’s very easy to make assumptions about how a feature will be used, by whom, and why. When people in an organization disagree about those assumptions, it can cause tension and waste time. Usability testing done right generates clear data that trumps assumptions and can help create common ground.

Source: Henrik Kniberg

Usability Testing Versus User Testing

A UX professional who reads this subheader may become wary, as the terms describing UX methods are constantly debated. User testing never refers to actually testing a user per se, as a user cannot do or say anything wrong. In this context, user testing refers to market and idea validation, whereas usability testing actually tests a solution.

User testing can also be called user research: the art of understanding a user’s (or potential user’s) pains and desires through methods like interviews, surveys, and focus groups. Our most common method is user interviews, where we reach out to clients who match the persona we want to understand better. The interviews are conducted either in person or by video, depending on what is most convenient. We usually spend an hour with the client, asking open-ended questions to understand their work situation, their pains, and what makes them thrive.

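As Henry Ford is often quoted as saying, “If I had asked people what they wanted, they would have said faster horses.” That is why our interviews focus on users’ pains and desires rather than simply asking what they want. Still, it is, always has been, and always will be our goal at Recorded Future to let our users drive our UX design process in a significant way.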

Usage Data Analytics

User testing, interviews, usability testing, and other forms of user feedback all tell a big part of the story, but it is just as important to look at what users actually do with your product when no one is watching. Analysis of usage data is core to our UX process. By correlating usage data with retention and engagement, we learn a lot about where users find the most value. This analysis allows us to improve workflows for our different user segments, making sure that users find value as quickly as possible.
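As a rough illustration of what correlating usage with retention can look like, here is a minimal Python sketch that compares the retention rate of users who used a given feature with that of users who did not, then ranks features by the difference. It assumes a hypothetical, already-anonymized per-user table (an opaque key, the set of features used in the first month, and whether the user was still active later); the schema, names, and time windows are illustrative assumptions, not Recorded Future’s actual pipeline. A gap between the two rates is a correlation that hints at where value may lie, not proof that the feature causes retention.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UserRecord:
    """Hypothetical anonymized per-user summary; `user_key` is an opaque ID."""
    user_key: str
    features_used: frozenset[str]  # features used during the first month (assumed window)
    retained: bool                 # still active later, e.g. after three months (assumed)


def retention_by_feature(records: list[UserRecord], feature: str) -> tuple[float, float]:
    """Return (retention of users who used `feature`, retention of those who did not)."""
    users = [r for r in records if feature in r.features_used]
    non_users = [r for r in records if feature not in r.features_used]

    def rate(group: list[UserRecord]) -> float:
        # Share of the group that was retained; 0.0 for an empty group.
        return sum(r.retained for r in group) / len(group) if group else 0.0

    return rate(users), rate(non_users)


def rank_features(records: list[UserRecord]) -> list[tuple[str, float]]:
    """Rank every observed feature by retention lift (users minus non-users)."""
    features = sorted({f for r in records for f in r.features_used})
    lifts = []
    for feature in features:
        with_f, without_f = retention_by_feature(records, feature)
        lifts.append((feature, with_f - without_f))
    return sorted(lifts, key=lambda item: item[1], reverse=True)
```

In practice, the groups would also be split by user segment, since a feature that correlates with retention for one segment may not for another.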

It’s worth mentioning that all usage data we look at is anonymized and cannot be tied back to individual users or clients; privacy is our highest concern. In addition, usage data never includes the actual data points or analysis our users are looking at. The same goes for all of the data we collect through testing and interviews: any results coming from tests and interviews are anonymized.

Let’s Get in Touch

We collaborate with UX teams in other companies, participate and present in meetups on the topic, and have internal, bi-weekly knowledge-sharing sessions. We’re always interested in getting in touch with UX teams in the industry for collaboration, so contact us at info@recordedfuture.com if you’re interested in continuing the conversation! And to learn more about Recorded Future, request a personalized demo today.
