I Have No Mouth, and I Must Do Crime

Deepfake voice cloning technology is an emerging risk to organizations and represents an evolution in the convergence of artificial intelligence (AI) threats. When leveraged in conjunction with other AI technologies, such as deepfake video technology, text-based large language models (“LLMs”, such as GPT), and generative art, the potential for impact increases. Threat actors are currently abusing voice cloning technology in the wild. It has been shown to be capable of defeating voice-based multi-factor authentication (MFA), enabling the spread of misinformation and disinformation, and increasing the effectiveness of social engineering.

As outlined in our January 26, 2023, report “I, Chatbot”, open-source or “freemium” AI platforms lower the barrier to entry for low-skilled and inexperienced threat actors seeking to break into cybercrime. These platforms’ ease of use and “out-of-the-box” functionality enable threat actors to streamline and automate cybercriminal tasks that they might otherwise be unequipped to carry out. One of the most popular voice cloning platforms on the market is ElevenLabs (elevenlabs[.]io), a browser-based text-to-speech (T2S; TTS) platform that allows users to upload “custom” voice samples for a premium fee. Voice cloning technologies, such as ElevenLabs, lower the barrier to entry for inexperienced English-speaking cybercriminals seeking to engage in low-risk impersonation schemes and provide opportunities for more sophisticated actors to undertake high-impact fraud. Threat actors have begun to monetize voice cloning services, including by developing their own cloning tools that are available for purchase on Telegram, leading to the emergence of voice-cloning-as-a-service (VCaaS).

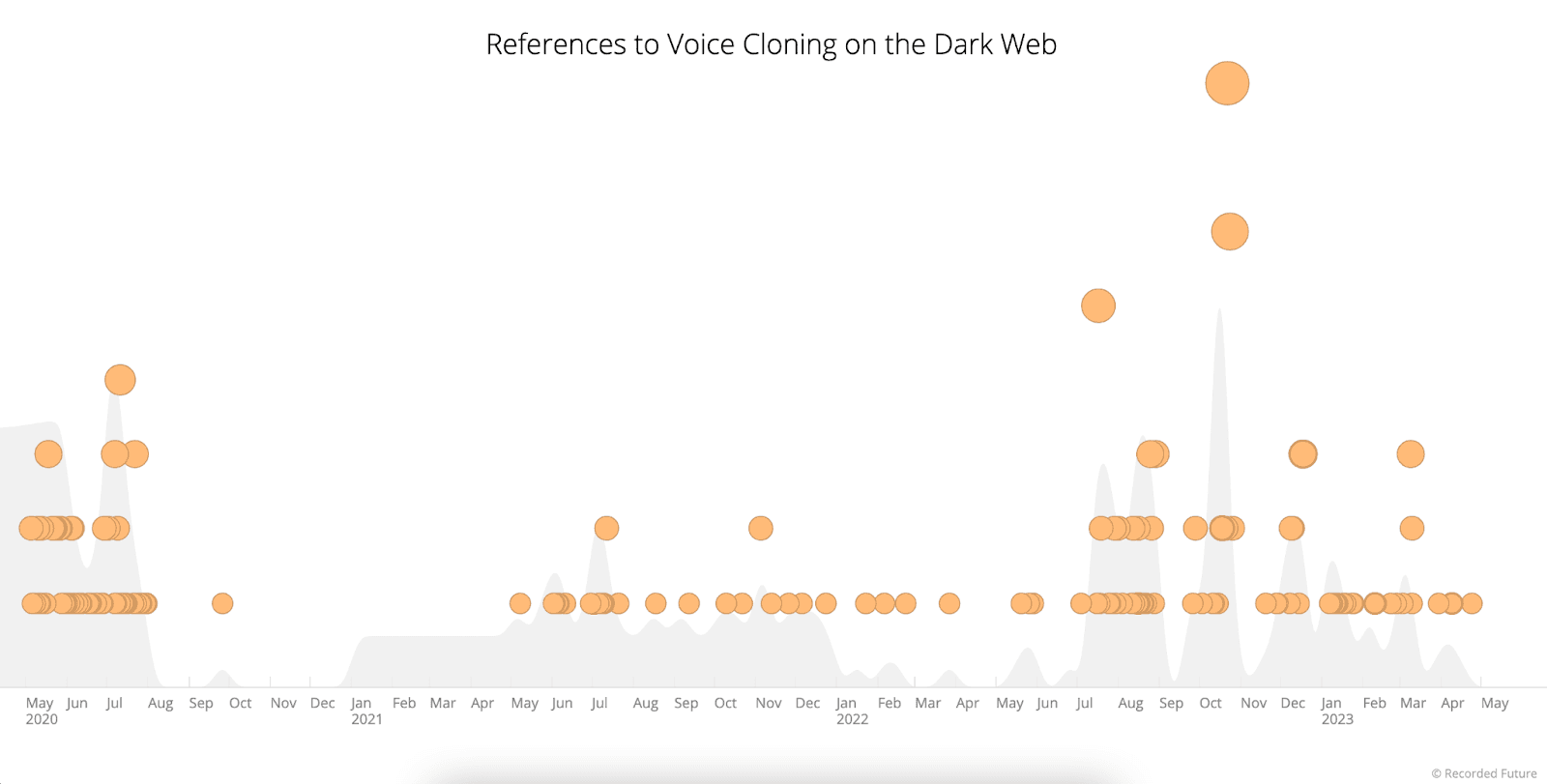

References to voice cloning on dark web sources increased significantly from May 2020 to May 2023

Voice cloning samples that surface on social media, messaging platforms, and dark web sources often leverage the voices of public figures, such as celebrities, politicians, and internet personalities (“influencers”), and are intended to create either comedic or malicious content. This content, which is often racist, discriminatory, or violent in nature, enables the spread of disinformation, as users on social media are sometimes deceived by the high quality of the voice cloning sample.

To mitigate current and future threats, organizations must address the risks associated with voice cloning while such technologies are in their infancy. Risk mitigation strategies need to be multidisciplinary, addressing the root causes of social engineering, phishing and vishing, disinformation, and more. Voice cloning technology is still leveraged by humans with specific intentions; it does not conduct attacks on its own. Therefore, adopting a framework that educates employees, users, and customers about the threats it poses will be more effective in the short term than fighting abuse of the technology itself, which should be a long-term strategic goal.

To read the entire analysis with endnotes, click here to download the report as a PDF.
