Anticipating Surprise: Using Indications, Indicators, and Evidence for Attack Preparation

Key Takeaways

- Anticipating and mitigating surprises to your organization is the primary function of threat intelligence.

- Warnings of surprise come as either strategic or tactical intelligence.

- Understanding the differences between analytical information types can focus your threat intelligence strategy and ensure you have the resources to adequately defend your networks and business.

Intelligence is a big business.

From the CIA and NSA to business intelligence and competitive intelligence to threat intelligence, business uses of intelligence draw from the marquee and mystique of a process that can seem secretive, exclusive, and inaccessible.

This mystique can lead business leaders to believe that intelligence processes will eliminate business risk. Unfortunately, this view can lead to the opposite of intelligence: surprise.1

The Impact of Surprise

In 2012-2013, virtually no one noticed a professional basketball player shooting more three-pointers than anyone else. Within basketball, three-point shots were a statistical anomaly. The easiest way to score points is driving the ball to the basket.

Tactically, many viewed a single player shooting more three-point shots than any other as an outlier in an already anomalous category.

Strategically, however, the game was shifting away from dunks toward range. Using three-point shots as a primary offensive scoring tool has advantages that other teams were not evaluating, such as less defensive coverage. Noticing the environmental changes missed by others is one benefit of applied intelligence.

That shooter was Stephen Curry, who last week capped off the NBA record for three-point shots in a season at 402. He led the Golden State Warriors, a team which set the NBA record for most wins in a season, at 73, and the NBA record for most three-point shots, at 1,077.

That’s the impact of strategic surprise.

Unfortunately, in cyber security, the game can change to your disadvantage, allowing new threats to arise and surprise your organization.

Organizational surprise means an information-gathering system has ultimately failed on one of two levels: tactical or strategic.

Tactical surprise is the failure of tactical warning; it is an unexpected, catastrophic attack by an adversary, the likes of Pearl Harbor or the Al Qaeda attacks on 9/11. Strategic surprise is a systemic failure to prepare for potential adversarial actions.

The overarching goal of threat intelligence is to protect the infrastructure.

Tactical warning aims to counter imminent threat; strategic warning aims to arm decision makers with the information to direct security resources to key business areas and minimize the impact of inevitable attacks.

The classic discussion of strategic versus tactical surprise comes from an analysis of the September 11 attack by the CIA’s Sherman Kent Center for Intelligence Analysis:

The terrorist attack of 11 September 2001 is fairly represented in open source commentary as a tactical surprise … Judging whether there was a failure of strategic as well as tactical warning is a more difficult task … Evidence on the public record indicates that intelligence communicated clearly and often in the months before 11 September the judgment that the likelihood of a major Al Qaeda terrorist attack within the United States was high and rising … that many responsible policy officials had been convinced… that U.S. vulnerability to such attack had grown markedly. Both governmental and non-governmental studies … had begun to recommend national investment in numerous protective measures …

The bottom line? Even after taking account of inevitable hindsight bias that accompanies bureaucratic recollection of prescience of dramatic events, the public record indicates (1) strategic warning was given, (2) warning was received, (3) warning was believed. Yet commensurate protective measures were not taken.

While tactically surprising and catastrophic, these attacks were not necessarily a strategic surprise, given the actions decision makers had taken. The intelligence community recognized the threat from Al Qaeda in numerous reports, and decision makers in the executive branch dedicated long-term resources to increasing defenses against attacks.

Far too often in information security, the tragic aftermath of a cyber incident reflects not only the attack itself but also the lack of strategic security resources provided.

Intelligence Is Actionable

All intelligence processes aim to create actionable information. Action can occur at a tactical (immediate defense) or strategic (resource allocation) level.

In the realm of threat intelligence, tactical warning directs immediate resources toward countering an imminent attack. The identification of ongoing and systematic attacks (and their corresponding indicators of compromise [IOCs]) on “neighboring” organizational networks within our business sector is an example of threat intelligence providing tactical warning.

Unfortunately, history shows that tactical surprise may be inevitable, particularly within information security. The volume, scale, and complexity of attacks increase the likelihood of surprise.

Strategic surprise occurs when intelligence capabilities fail to identify environmental circumstances, developing tipping points, or both. At the strategic level, information about developing threats, or the insight gained from previous attacks, can guide resource allocation before an imminent attack forces a surge. Strategic warning relies on both comprehension of the overall threat environment and specific insight into developing threats.

Either action requires an understanding of three techniques used to identify specific threat intelligence elements.

What We Identify

In the scope of information security, analysts attempt to identify information of value through tasks. Threat intelligence analysts rely on three closely related but often confused tasks.

The first task, triage, identifies indications; the second task, analysis, identifies indicators; the third task, recovery, identifies evidence.

1. Triage

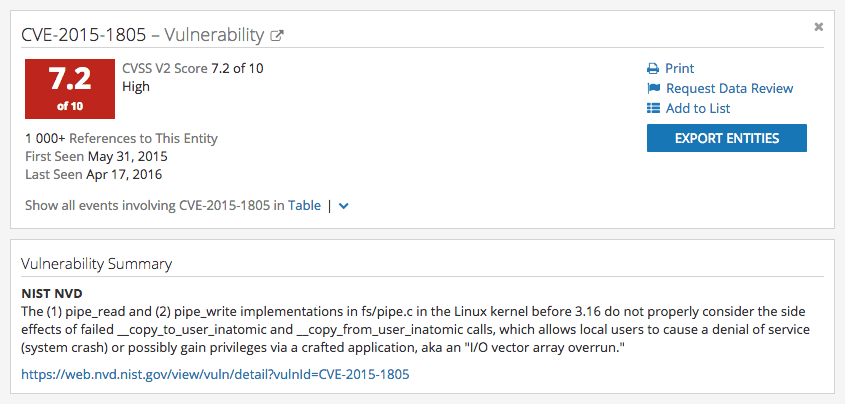

Indications may be “a sign, a symptom, a suggestion, or grounds for inferring or a basis for believing.” In the above instance, we have identified a vulnerability, CVE-2015-1805, which allows local users to gain privileges to a system. In and of itself, this vulnerability is dangerous; however, it has no particular context or applicability to a network without further evaluation. It’s an indication.

Many times when evaluating information, from political commentary to threat intelligence research, we’re looking for indications. Indications allow the threat intelligence analyst to identify and qualify beliefs on a recurring basis. Rather than a proven association, indications are broad and unsubstantial as a whole; “the sky is blue because I stepped outside, in clear conditions, and noticed a blue sky above me.”

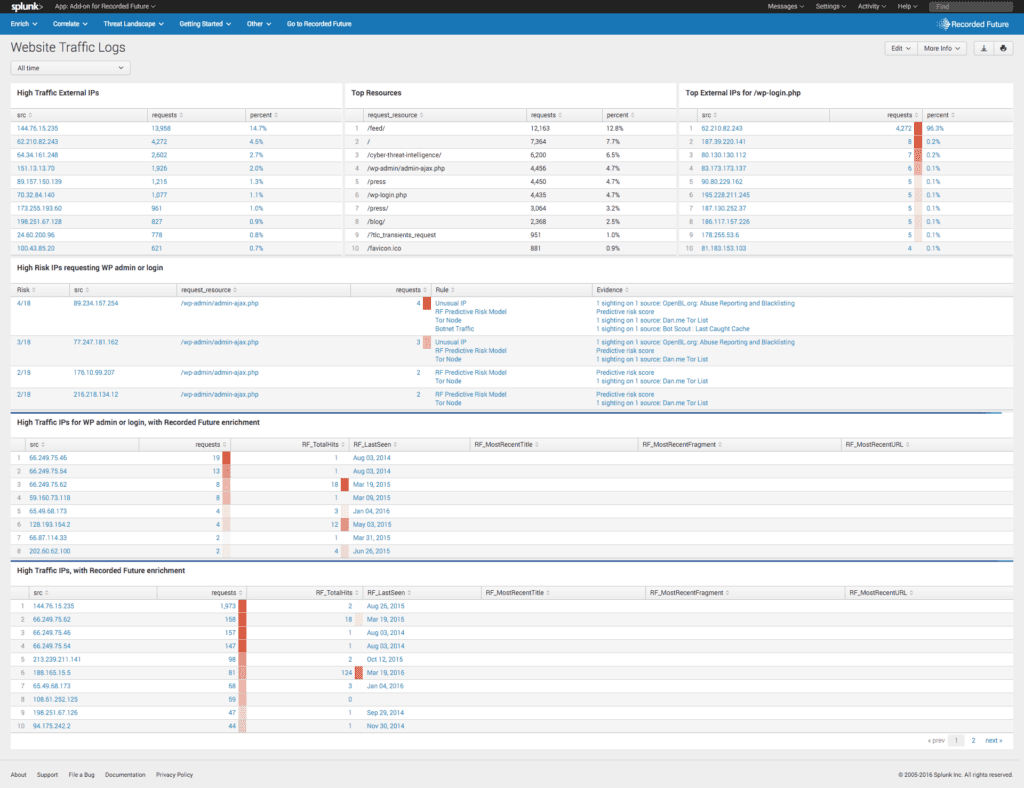

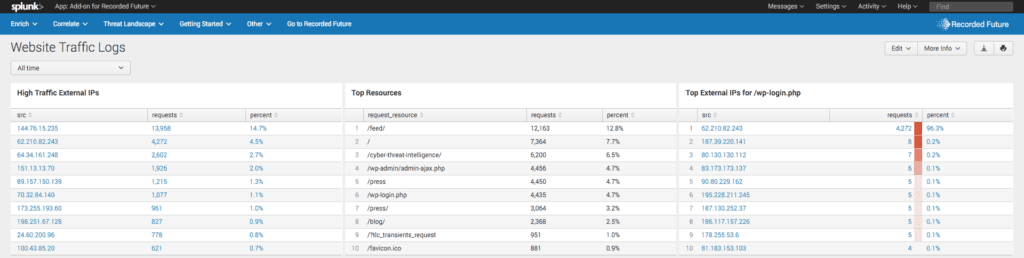

To place this in an information security context, a SIEM (security information and event management) system like Splunk can also provide indications and environmental awareness regarding vulnerabilities and threats, but without the details that make them actionable, such as target selection or timing of attack.

2. Analysis

Though not conclusive in and of themselves, indicators are the “known or theoretical steps” an adversary should take in preparing to conduct an attack. Indicators are tied to a known step in the cyber kill chain methodology.

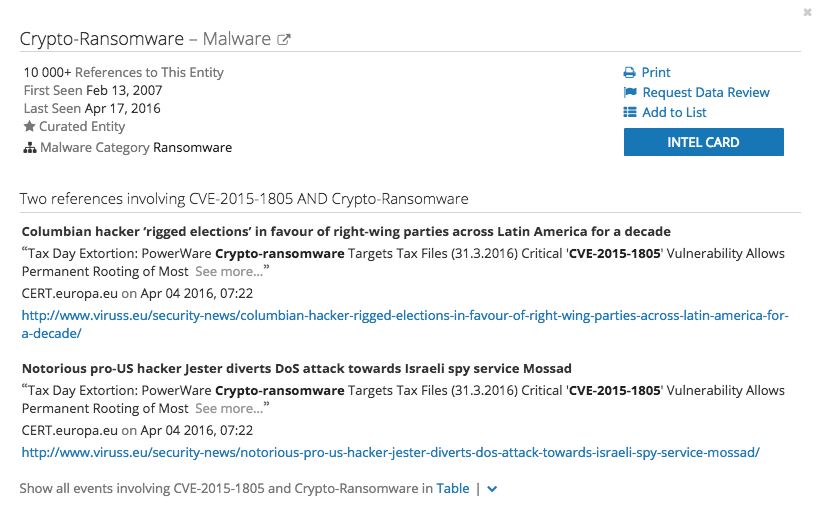

The identification of CVE-2015-1805 is an indication; the association of that vulnerability with a known adversarial methodology and target set, in this case crypto-ransomware used against individuals and businesses, is an indicator. The key to this process is being able to determine whether the information of value has a known causative or correlative relationship with an adversary or adversarial tool, typically through either origination of data or enrichment of existing data.
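The step from indication to indicator can be sketched in a few lines of Python. This is an illustration only, not a description of any product: the `Indication` and `Indicator` classes, the `KNOWN_ASSOCIATIONS` table, and the `promote` function are hypothetical names, and the table's single entry restates this article's example. The kill chain step assigned to it is an assumption made for the sketch.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Indication:
    """A raw observation with no adversary context yet (output of triage)."""
    identifier: str   # for example, a CVE ID
    description: str


@dataclass(frozen=True)
class Indicator:
    """An indication tied to a known adversary method (output of analysis)."""
    indication: Indication
    adversary_method: str
    kill_chain_step: str


# Hypothetical association table. In practice this context would come from
# originated or enriched threat data; the entry below only mirrors the
# article's example, and the "exploitation" step is an assumed label.
KNOWN_ASSOCIATIONS = {
    "CVE-2015-1805": ("crypto-ransomware", "exploitation"),
}


def promote(indication: Indication) -> Optional[Indicator]:
    """Return an Indicator if the indication has a known association, else None."""
    association = KNOWN_ASSOCIATIONS.get(indication.identifier)
    if association is None:
        return None  # still only an indication
    method, step = association
    return Indicator(indication, method, step)
```

An indication with no entry in the table stays an indication; only a known relationship with an adversary or adversarial tool turns it into an indicator.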

Fortunately, much of this workflow is automated, either by origination of new data (threat intelligence platforms, or TIPs) or by enrichment of existing data (SIEM integrations).

Indicators collate various indications surrounding adversarial actions into the beginnings of a comprehensive threat picture. Strategic warning, however, requires a more thorough analysis of attackers, patterns of life, adversarial resources, and potential chokepoints.

In the above Splunk dashboard, you can see the Recorded Future integration enriching the automated initial collection of indications — IP addresses attempting connections — with the possible risk of those IP addresses being associated with known pathways for malware.

Of note, this activity alone is still triage; we are identifying the highest risk IP addresses for further analysis, but have not yet associated those addresses with known steps toward conducting an attack.
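The ranking step described here, picking the highest-risk IP addresses for further analysis, might look something like the following Python sketch. It does not use any real SIEM or vendor API: the `triage_by_risk` function, the shape of the `risk_scores` mapping, and the threshold of 65 are all assumptions chosen for illustration, and the addresses in the usage note come from reserved documentation ranges.

```python
from typing import Dict, Iterable, List, Tuple


def triage_by_risk(
    observed_ips: Iterable[str],
    risk_scores: Dict[str, int],
    threshold: int = 65,   # assumed cut-off, not a vendor default
    default_score: int = 0,
) -> List[Tuple[str, int]]:
    """Return (ip, score) pairs at or above the threshold, highest risk first.

    observed_ips: addresses seen attempting connections (the indications).
    risk_scores:  hypothetical enrichment data mapping an address to a score.
    """
    unique_ips = set(observed_ips)  # repeated connection attempts collapse to one entry
    scored = [(ip, risk_scores.get(ip, default_score)) for ip in unique_ips]
    flagged = [pair for pair in scored if pair[1] >= threshold]
    # Sort by descending score, then by address so the order is deterministic.
    return sorted(flagged, key=lambda pair: (-pair[1], pair[0]))
```

Calling `triage_by_risk(["192.0.2.10", "198.51.100.7", "203.0.113.5"], {"192.0.2.10": 90, "198.51.100.7": 40, "203.0.113.5": 70})` returns the two addresses scoring 90 and 70, in that order. The output is a shortlist for an analyst and nothing more; the addresses only become indicators once they are tied to a known step toward an attack.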

3. Recovery

Evidence is the body of known facts and information resulting from an action. Unlike indications or indicators, evidence occurs post-incident. In the context of network security, evidence can result from an intrusion or breach and be used to determine whether data was lost, or the extent of the known data loss. In a less scary scenario, evidence can also be provided by researchers using a malware analysis test environment.

Evidence is bad if it happens to you … but good if it happens elsewhere. With our example, evidence indicates most crypto-ransomware searches for and encrypts local and network files that have write permissions. One easy defensive action resulting from this discovery is write-protecting network files.
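Before write-protecting anything, it helps to know which files are broadly writable in the first place. The Python sketch below is one rough way to audit that, under stated assumptions: it relies on POSIX permission bits (so it is not meaningful on Windows shares), it only checks group and other write bits rather than ACLs, and `find_broadly_writable` is a hypothetical helper name rather than part of any tool mentioned in this article.

```python
import os
import stat
from typing import Iterator


def find_broadly_writable(root: str) -> Iterator[str]:
    """Yield regular files under root that are writable by group or others.

    Assumes POSIX permission bits; ACLs and share-level permissions are not
    inspected, so treat the result as a starting point for review.
    """
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                mode = os.lstat(path).st_mode  # lstat: do not follow symlinks
            except OSError:
                continue  # file vanished or is unreadable; skip it
            if stat.S_ISREG(mode) and mode & (stat.S_IWGRP | stat.S_IWOTH):
                yield path
```

The function only reports; it never changes permissions. Deciding which of the reported files can safely be made read-only is left to whoever owns the data.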

Strategically, evidence can give decision makers the impetus to allocate valuable resources to prepare for, and potentially surge in response to, severe and surprising incidents. Evidence demonstrates the most immediate impacts on the business; communicating these impacts is a key strategic role of the threat intelligence team.

For the threat intelligence analyst, evidence provides the best glance into the future.

Stuxnet was an anomalous event, a Black Swan, created by advanced, forward-looking, well-funded teams for a very specific use. Most of its followers are clones. Malware research teams are very skilled at identifying the pathways, domains, transmission methods, and other IOCs from active malware. By fully exploring current malware, we can identify commonalities and possibly identify future trends.

One trend is the use of privacy infrastructures, like Tor, for staging botnet command and control (C&C) servers. Notorious malware such as KeRanger and the Nuclear exploit kit use Tor to, respectively, connect to C&C servers and drop the malicious payload into the network.

The future points to malware like onion bots.

Onion bots are resilient, stealthy botnets utilizing privacy infrastructures to “evade detection, measurement, scale estimation, observation, and in general all IP-based current mitigation techniques.”

Understand the Difference

Don’t be surprised. Missed insights are more than just attacks.

Strategic surprise means you couldn’t respond to attacks because of missing resources, leading to slower incident response, greater recovery times, and higher business losses.

Understanding the differentiation between indications, indicators, and evidence — and the techniques to discover them — allows you to build a threat intelligence capability to fully investigate and discover threats, and thus avoid surprises.

Avoid surprise.

1 This article was inspired by the seminal book _Anticipating Surprise_ by Cynthia Grabo. This book is a formerly classified, CIA-published guide to understanding and implementing strategic warning analysis in an organization. By “known or theoretical step,” Grabo is referring to a predicted step in an adversarial methodology which is possible but not yet observed.
